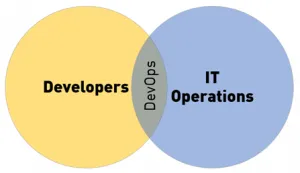

Earlier, we discussed the friction between developers and IT operations teams. This conflict exists primarily because the two teams have opposing motivations. Developers generate change and expect it to be rolled out to production in the shortest possible time. The operations team wants to avoid change in order to keep the production systems stable. This conflict between “Need for change” and “Fear of change” results in isolated silos.

The DevOps phenomenon happened because history repeats itself. During the 70s, there was no clear difference between developers and administrators. Every “computer professional” working on the mainframes dealt with all the operations. She had to write code, mount the tapes, flip the switches on the panel and even replace the burnt vacuum tubes.

Things changed with the advent of minicomputers, then PCs, followed by the client/server architecture. Computer professionals got classified into users, programmers and operators. Users expected everything to work magically while the programmers were churning out code. Programmers distanced themselves from the grunt work of crimping cables, installing software and managing the networking gear. The operators slogged in a cold, dingy corner called the server room. With time and the exponential growth of the internet, users became more sophisticated and expected more, programmers got overwhelmed by the growing complexity of code, server rooms transformed into expansive datacenters and the operators became responsible for supporting both developers and end users.

Fast forward to the current era of cloud: the line between developers and operators has started to blur, resembling the mainframe era. Today, developers write code to provision servers, load balancers and firewalls, while IT administrators are turning into developers who write code that automates operations. Welcome to the world of DevOps where infrastructure is code!

On a lighter note, below is a Tweet from @devops_borat that helps us differentiate between the devs and ops.

So, what exactly is DevOps? DevOps is the methodology where developers and operations teams collaborate, communicate and work together to ensure rapid delivery of software.

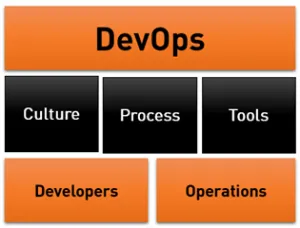

Did you notice the word methodology in the definition? It carries more weight than it might seem. DevOps is not a new technology or framework that can be instilled through a conventional training and skills transfer program. DevOps is not a new job function where an individual or a team becomes responsible for the implementation. DevOps is not a business process that can be outsourced to external consultants.

Like most of the proven methodologies, DevOps has three essential pillars –

Culture – This is the most important aspect of embracing DevOps. Organizations need to create a culture that reflects the principles of DevOps. This culture is all about communication and collaboration among the developers, testers and the IT Ops team. Many problems will be addressed when the developers adopt the agile methodology. The IT Ops team should follow the new IT services model, which reduces management overhead and delivers better value for both internal and external customers. The CIO/CTO organizations are responsible for driving the cultural transformation initiative across the company. DevOps is nothing without the discipline and the culture.

Process – Every organization will have a unique way of writing code, testing it and releasing it. DevOps stands on the pillar of a well-defined process. It is no less complex than the workflow of a business process aligned with an ERP. The process clearly defines everything that takes place from the time a developer checks code into source control until it surfaces on the production system, ready to be consumed by the end users. While this may look deceptively simple, it involves complex orchestration of various functions. It addresses the way the code is built, tested and released. It cuts across the development teams, testing teams, operations, beta customers and end users. DevOps is not a disruptive process. It doesn’t force change that may be resisted by the teams. Instead, DevOps brings inclusiveness by aligning existing processes, tools and teams with the common vision of delivering reliable software more rapidly.

Tools – There is a set of tools to realize the promise of DevOps. Some of them are time tested and have been in use for decades, while others were created with this methodology in mind. Every organization that is moving towards DevOps should document the inventory of disparate tools used by the developers, project managers, testers and IT Ops. This ranges from version control systems like Git and SVN to build tools like Ant and Maven, testing tools like LoadRunner, JMeter and Selenium, provisioning tools like Vagrant, configuration management tools like Chef and Puppet, monitoring tools like Nagios and Ganglia, and finally trouble ticketing tools like Jira. These tools are not designed to work together, as they target different groups with different outcomes. Based on the defined process of DevOps as identified by the CIO/CTO, these tools need to be connected together. This is where orchestration engines like Jenkins play a critical role. By creating a well-defined orchestration aligned with the process, these disparate tools will work together to enable continuous delivery of software. However, it is extremely important that organizations resist the temptation to invest in tools without a blueprint for the process and the culture of DevOps. Tools alone, without the process and culture, cannot deliver the promise of DevOps.
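To make the idea of orchestration a little more concrete, here is a minimal sketch in Python of what "connecting disparate tools" can look like: a list of stages run in order, stopping at the first failure. This is not how Jenkins or any other engine is implemented. The names `Stage` and `run_pipeline` and the stage list are hypothetical, and each stage is a stand-in for a call to a real tool.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    """One step of the delivery process (hypothetical model)."""
    name: str
    run: Callable[[], bool]  # returns True on success, False on failure


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for stage in stages:
        if not stage.run():
            break  # a failed stage blocks everything after it
        completed.append(stage.name)
    return completed


# Placeholder stages: in practice each lambda would invoke a real tool
# (a build tool, a test runner, a provisioning tool, and so on).
pipeline = [
    Stage("build", lambda: True),
    Stage("test", lambda: True),
    Stage("provision", lambda: True),
    Stage("deploy", lambda: True),
]

if __name__ == "__main__":
    print(run_pipeline(pipeline))
```

The point of the sketch is the ordering and the early stop: a failed test stage means nothing gets provisioned or deployed, which is exactly the kind of rule the process should define before any tool is chosen.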

We have thus looked at DevOps as an essential methodology for software development organizations. I will cover the aspects of culture, process and tools in detail in future posts. Stay tuned!