AI Terminologies 101: Explainable AI - The Key to Trustworthy AI Systems

Discover the power of Explainable AI in this AI Terminologies 101 guide, detailing the methods and techniques used to make AI systems more understandable, transparent, and trustworthy.

Explainable AI refers to the development of artificial intelligence systems that can provide clear and understandable explanations for their decisions, actions, and reasoning. As AI technologies become more prevalent in our lives, the need for transparency and trust in these systems grows. In this article, we will explore the concept of Explainable AI, its underlying principles, and its applications in various domains.

Explainable AI is an emerging field within artificial intelligence that focuses on creating models and algorithms that can be easily understood by humans. The goal is to bridge the gap between the complex inner workings of AI systems and the human users who interact with them, enabling people to trust and effectively use AI technologies.

There are several approaches to achieving Explainable AI, including:

- Interpretable Models: Developing AI models that are inherently interpretable, such as decision trees or linear regression models, allows users to understand the relationships between input variables and the model's output.

- Post-Hoc Explanations: Generating explanations for the decisions of complex AI models, such as deep neural networks or support vector machines, after they have been trained. Common techniques include Local Interpretable Model-agnostic Explanations (LIME), which fits a simple surrogate model around a single prediction, and Shapley values, which attribute a prediction to the individual input features.

- Visualization Techniques: Using visual representations to help users understand what an AI model has learned, such as t-distributed Stochastic Neighbour Embedding (t-SNE) for projecting high-dimensional data into two or three dimensions, or activation maps that show which parts of an image a convolutional neural network responds to.
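To make the idea of a post-hoc explanation more concrete, here is a minimal sketch of how Shapley values can be computed exactly for a very small model. Everything in it is an assumption made for illustration: `toy_credit_score`, its three feature names, and the baseline input are hypothetical, and "removing" a feature is simulated by swapping in its baseline value, which is only one of several possible conventions. Real toolkits approximate this calculation, because the exact version enumerates every subset of features and becomes impractical beyond a handful of inputs.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one input x, relative to a baseline input."""
    n = len(x)
    features = range(n)

    def value(subset):
        # Features in `subset` keep their real values; the rest use the baseline.
        mixed = [x[i] if i in subset else baseline[i] for i in features]
        return model(mixed)

    phi = [0.0] * n
    for i in features:
        others = [j for j in features if j != i]
        for size in range(n):
            # Shapley weight for subsets of this size: |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to subset s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_credit_score(features):
    # Hypothetical model with an interaction term, so it is not purely linear.
    income, debt, years_employed = features
    return 2.0 * income - 1.5 * debt + 0.5 * income * years_employed


applicant = [3.0, 2.0, 4.0]  # hypothetical applicant
baseline = [1.0, 1.0, 1.0]   # hypothetical reference applicant

phi = shapley_values(toy_credit_score, applicant, baseline)
for name, contribution in zip(["income", "debt", "years_employed"], phi):
    print(f"{name}: {contribution:+.2f}")
```

The contributions add up to the difference between the model's output for the applicant and its output for the baseline, which is what makes them readable as a breakdown of a single prediction. For a purely linear model under this baseline convention, each contribution reduces to the feature's weight times its distance from the baseline, which is one reason linear models count as inherently interpretable.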

Explainable AI has numerous applications across various domains, particularly in areas where trust and transparency are essential. In healthcare, Explainable AI can help doctors and patients understand the reasoning behind AI-generated diagnoses or treatment recommendations. In finance, it can help regulators and users understand the decision-making process of AI-driven trading systems or credit scoring models. In autonomous vehicles, Explainable AI can help build trust in the decision-making capabilities of self-driving cars.

Explainable AI is an essential aspect of artificial intelligence, especially as AI technologies become more integrated into our daily lives. By developing AI systems that are transparent, understandable, and trustworthy, we can help ensure that these technologies are used ethically and responsibly.

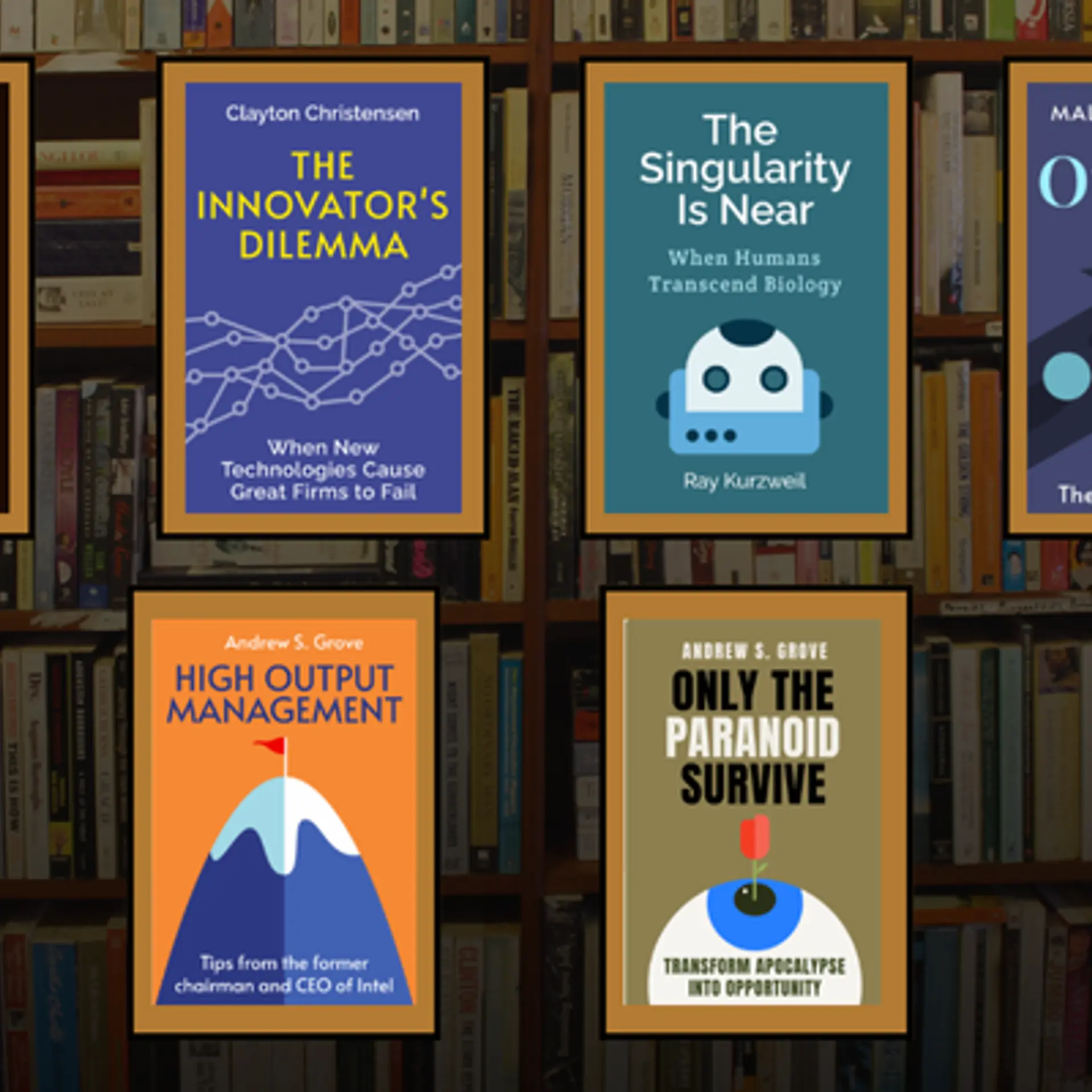

In future articles, we'll dive deeper into other AI terminologies, like Multi-Agent Systems, Transfer Learning, and Conversational AI. We'll explain what they are, how they work, and why they're important. By the end of this series, you'll have a solid understanding of the key concepts and ideas behind AI, and you'll be well-equipped to explore this exciting field further.