Google Cloud Platform for AWS Professionals - Part 2

In part 1, we looked at the building blocks of AWS and GCP. This part of the series will introduce the compute service by comparing Amazon EC2 and Google Compute Engine (GCE).

Signing up and getting started

Both AWS and GCP allow you to create an account without entering credit card details. However, to consume the services, you need to validate your credit card.

Just as a valid AWS account provides access to various services, a GCP project provides access to the services of the platform. You can use your Gmail account to create the project. A project needs to be billing-enabled before you can launch your first instance. Google tracks the usage of resources per project and bills you accordingly. Each project has a unique ID that is used by various tools. In short, a project is the unit of billing in GCP.

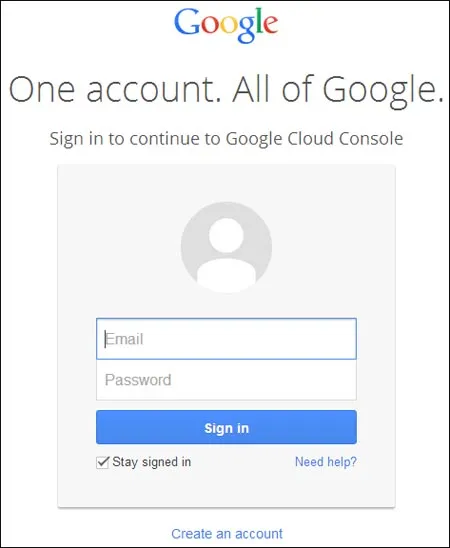

Accessing the platform

Like AWS, GCP is accessible through multiple channels. You can log in to the Google Developers Console, which is the web-based interface, use the Command Line Interface (CLI), or consume the API directly if you are a developer.

The Google Developers Console is a one-stop shop for dealing with GCP. You can access App Engine, Compute Engine, Cloud Storage, Cloud Datastore, Cloud SQL and BigQuery from the same user interface. This is comparable to the AWS Management Console.

If you need more power, the command line tools are the best choice. Like the AWS CLI, the GCP CLI is written in Python. It is part of the Google Cloud SDK, which can be downloaded from https://dl.google.com/dl/cloudsdk/release/google-cloud-sdk.zip. You will mostly use the gcloud, gcutil and gsutil commands to manage your deployments. gcutil has multiple options to manipulate the VMs, networks, persistent disks and other entities of GCE.

Finally, for developers, the REST interface of GCP offers the most power and control. Every operation performed through the console or the CLI calls this same interface.

Understanding the scope of resources

If you are an experienced AWS professional, you know that each resource created within AWS has a specific scope. At a high level, AWS scope is defined by the region and the availability zone. By default, every resource that you create within AWS belongs to a specific region. This includes the Amazon Machine Image (AMI), Elastic IP (EIP), Elastic Load Balancer (ELB), Security Group, Virtual Private Cloud (VPC), EBS Snapshots and so on. Certain resources are confined to a specific availability zone. EC2 instances and Elastic Block Store (EBS) volumes are examples of this.

If you want to share resources across regions, you have to explicitly initiate a copy of the AMI or the EBS Snapshot. However, this creates independent resources that need to be maintained separately. That means that every time you update the golden AMI, you have to manually copy it to the destination region(s). This results in “AMI hell”, where you have to manage multiple copies of the same AMI across regions. For a multi-tier application that has three golden AMIs and runs across two regions, you will end up managing six images.

The other important factor is the bandwidth cost involved in cross-region data transfer. AWS charges you for outbound traffic even if it flows between AWS regions.
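The arithmetic behind this image sprawl can be sketched in a few lines of Python. The function names and parameters are illustrative and not part of any AWS tool. The sketch simply assumes that every golden AMI must exist as a separate copy in every region where it is launched.

```python
def regional_image_copies(golden_images: int, regions: int) -> int:
    """Number of AMI copies to maintain when images are region-scoped."""
    if golden_images < 0 or regions < 0:
        raise ValueError("counts must be non-negative")
    return golden_images * regions


def copies_per_update(regions: int) -> int:
    """Cross-region copies needed each time one golden AMI is updated,
    assuming it is built in one region and copied to all the others."""
    return max(regions - 1, 0)


# The example from the text: three golden AMIs running across two regions.
print(regional_image_copies(3, 2))  # 6
print(copies_per_update(2))         # 1
```

The count grows with the product of images and regions, so adding a third region to the same application would mean nine images to track.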

Imagine the ability to create AMIs that are not confined to a specific region. This would make it easy to manage images centrally. You could maintain one golden AMI and launch it in any region. This feature has always been high on the AWS customer wish-list, but Amazon has not addressed it so far.

This is where GCE shines! By introducing the global scope, Google made it possible to create resources that are not specific to a region or a zone. Apart from region and zone, GCE explicitly calls out the global scope. Resources like images, snapshots, networks and routes are global. So, an image that you created as a part of your project can be launched in US Central or Europe West without performing the manual copy. Google carefully analyzed the pain points of AWS customers and added those features that appeal to them.
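One way to see the three scopes is in the resource paths of the Compute Engine REST API, where the scope appears as a path segment after the project. The sketch below builds such paths. The helper name and the project, region, zone and resource names are hypothetical, and the path layout reflects my understanding of the v1 API, so verify it against the current API reference before relying on it.

```python
from typing import Optional


def resource_path(project: str, collection: str, name: str,
                  region: Optional[str] = None,
                  zone: Optional[str] = None) -> str:
    """Build a project-relative path for a global, regional or zonal resource."""
    if region and zone:
        raise ValueError("a resource is either regional or zonal, not both")
    if zone:
        scope = f"zones/{zone}"
    elif region:
        scope = f"regions/{region}"
    else:
        scope = "global"  # images, snapshots, networks and routes
    return f"projects/{project}/{scope}/{collection}/{name}"


# Hypothetical names, for illustration only.
print(resource_path("my-project", "images", "golden-web"))
print(resource_path("my-project", "addresses", "web-ip", region="us-central1"))
print(resource_path("my-project", "instances", "web-1", zone="europe-west1-a"))
```

Note that the image path carries no region or zone at all, which is why the same image can be referenced when launching an instance in any zone.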

Images

OS images in GCE are similar to AMIs in AWS. Each image is a Persistent Disk (PD) that contains a bootable operating system and a root file system. Persistent disks are comparable to Amazon EBS. I will compare EBS with PD in a later part of this series. As discussed in the previous section, images are specific to a project and are not confined to a region. As of January 1, 2014, GCE supports Debian and CentOS images, with Red Hat Enterprise Linux and SUSE Linux Enterprise Server available in preview mode.

After you launch an instance from an existing image and customize it, you can bundle it to create a custom image. Though this is not integrated with the console, you can use the CLI or the SDK to perform the bundle operation. Bundling involves launching an instance, customizing it, creating a new image, uploading it to Google Cloud Storage and registering it with GCE. Once registered with the global images collection, the image can be used to launch multiple instances. This process closely resembles the bundling of EC2 AMIs before it was integrated with the AWS Management Console.

Machine Types

Like Amazon EC2, GCE also has multiple types of machines and configurations.

There are four machine types:

- Standard Machine Type – This is the generic family that is ideal for most of the general-purpose workloads. This is comparable to the M1/M3 family of Amazon EC2 instance types.

- Shared-Core Machine Type – This is ideal for applications that don't require a lot of resources. With short-period bursting capabilities, this machine type is comparable to the T1 family of Amazon EC2.

- High Memory Machine Type – This machine type is designed to run OLTP databases, memory caches and in-memory databases that demand more memory relative to the CPU. M2 and the latest CR1 family of Amazon EC2 offer similar capability.

- High CPU Machine Type – This type of machine is used for CPU-intensive workloads that need more cores relative to memory. Video encoding, image processing and high-traffic websites are the typical workloads for this machine type. The C1, CC2 and the latest C3 families of Amazon EC2 are comparable to this type.
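The comparison in the list above can be captured as a small lookup table. This is only a convenience sketch: the dictionary and function names are made up for illustration, and the pairings are the rough equivalences described in this article, not an official mapping from either vendor.

```python
from typing import List

# GCE machine type family -> comparable Amazon EC2 families, as listed above.
COMPARABLE_EC2_FAMILIES = {
    "standard": ["M1", "M3"],
    "shared-core": ["T1"],
    "high-memory": ["M2", "CR1"],
    "high-cpu": ["C1", "CC2", "C3"],
}


def comparable_ec2_families(gce_machine_type: str) -> List[str]:
    """Return the EC2 families this article pairs with a GCE machine type."""
    key = gce_machine_type.strip().lower().replace(" ", "-")
    if key not in COMPARABLE_EC2_FAMILIES:
        raise KeyError(f"unknown GCE machine type: {gce_machine_type!r}")
    return COMPARABLE_EC2_FAMILIES[key]


print(comparable_ec2_families("High Memory"))  # ['M2', 'CR1']
print(comparable_ec2_families("Shared-Core"))  # ['T1']
```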

Where AWS measures compute capacity in EC2 Compute Units (ECU), GCE uses the Google Compute Engine Unit (GCEU). According to the official documentation, GCEU, pronounced GQ, is a unit of CPU capacity that is used to describe the compute power of the instance types. Google chose 2.75 GCEUs to represent the minimum power of one logical core (a hardware hyper-thread) on its Sandy Bridge platform. For a long time, AWS used a similar explanation to describe the ECU. But after the introduction of the C3 and M2 types, AWS removed all references to the definition that 1 ECU is equivalent to the CPU capacity of a 1.0-1.2 GHz Intel Xeon or Opteron processor. Even the ECU section of the FAQ no longer has the original definition.
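As a rough illustration of how the 2.75 GCEUs per logical core figure scales, the sketch below multiplies it by a core count. The function name is hypothetical, and the calculation assumes whole, dedicated logical cores, so it does not describe shared-core machine types. Because the figure is a stated minimum, treat the result as a lower bound.

```python
GCEU_PER_LOGICAL_CORE = 2.75  # minimum power of one Sandy Bridge hyper-thread


def minimum_gceus(logical_cores: int) -> float:
    """Lower bound on GCEUs for an instance with dedicated logical cores."""
    if logical_cores < 1:
        raise ValueError("expected at least one logical core")
    return GCEU_PER_LOGICAL_CORE * logical_cores


for cores in (1, 2, 4, 8):
    print(cores, minimum_gceus(cores))
# 1 2.75
# 2 5.5
# 4 11.0
# 8 22.0
```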

In the next part of this series, I will cover the advanced concepts of Google Compute Engine and compare it with Amazon EC2. Stay tuned!