How to harness AI and ML in the world of testing to optimise time to market: ThoughtWorks team shares their take

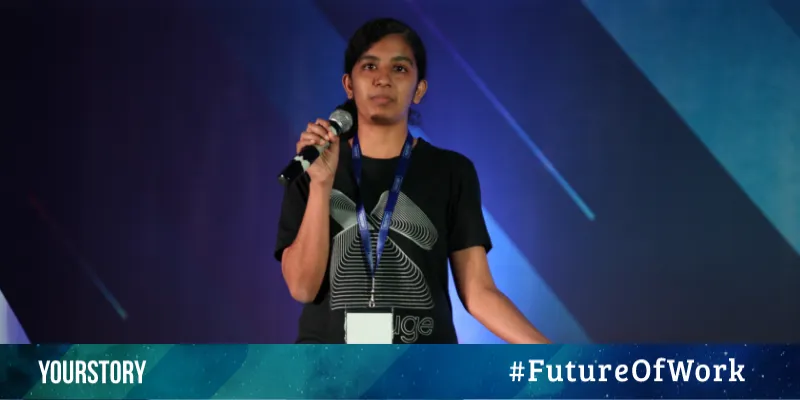

From static websites and dynamic forms to AI-based software, ThoughtWorks’ product journey has evolved with the advances in technology. At YourStory’s Future of Work Conference 2019, Fathima Harris, Senior Quality Analyst, ThoughtWorks, and Tina Thomas, Developer, ThoughtWorks, took to the stage to share how their organisation is leveraging AI and ML to improve product testing.

Tina Thomas, Developer, ThoughtWorks

“Today you have virtual personal assistants that are smart enough to listen to you talk and figure out what you want them to do,” said Tina. ThoughtWorks focuses on building recommendations based on previous preferences so that users get suggestions for products that they are most likely to buy. They also have smart face detection systems and fraud detection systems being used by crime investigators.

While a number of products in the market are AI-based, the question is how using AI and ML would tie back into the company’s delivery processes. “AI and ML will give you intelligent inferences that would take a lot more time if a human was trying to do it,” Tina said. These emerging technologies also provide faster feedback, reduce manual intervention and, as a result, shorten the overall delivery time.

Fathima spoke about their delivery cycles which have different phases. The first is analysis and planning where they gather requirements and analyse what needs to be built. Then they decide on the UI/UX, following which they get the software in working condition after putting together all the different components and testing them. She added that the cycle is an iterative process where AI and ML are applied in every phase.

Fathima Harris, Senior Quality Analyst, ThoughtWorks

The talk focused on the challenges one faces during the testing phase and how AI- and ML-based tools could help. One common challenge is that scripts still have to be written by hand even when the tests are being automated. “Even if you put all hands on deck, have your scripts sorted and automated them, there is still a chance of the test failing and you spend a lot of time figuring what went wrong either with the application or the test,” said Fathima.

In most cases, the tests are unstable, and a significant amount of time is spent on root cause analysis. But several tools, such as Testim, Applitools Eyes, ReportPortal.io, ZAP, Functionize, Test.ai, Sealights, Appvance and Retest, can help address this problem.

AI/ML tools that simplify testing

Testim

Testim is a tool that helps you author your tests quickly. It follows a simple record-and-playback process, but what sets Testim apart from other tools is its learning capability: it understands the changes your application has gone through, including the changes the developer has made. It records your actions and converts them into a test, and it learns how you would react to a failure. You don’t have to invest time in finding a locator to create a test, because the tool takes care of it. Instead, you can focus on designing and organising your tests.

When Testim records your test, it takes a snapshot. It doesn’t just look for a single attribute of an element, such as its class or ID. It finds all the locators associated with that element, assigns each one a score and ranks them. In the event of a change, if it can’t find the highest-ranked locator, it falls back on the second-highest one. You don’t have to manually figure out why the test failed, because the tool takes care of that.
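The ranked fallback described above can be pictured with a short Python sketch. This is only an illustration of the idea and not Testim’s actual implementation: the `Locator` class, the scores, the `find_with_fallback` function and the toy `page` dictionary are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Locator:
    strategy: str   # hypothetical labels such as "id", "class" or "text"
    value: str
    score: float    # higher means the locator is trusted more


def find_with_fallback(
    locators: list[Locator],
    lookup: Callable[[str, str], Optional[str]],
) -> tuple[Locator, str]:
    """Try locators from highest to lowest score and return the first match."""
    for locator in sorted(locators, key=lambda loc: loc.score, reverse=True):
        element = lookup(locator.strategy, locator.value)
        if element is not None:
            return locator, element
    raise LookupError("no locator matched the element")


# A toy "page" after a change: the id was renamed, the class and text survived.
page = {
    ("class", "btn-primary"): "<button>",
    ("text", "Submit"): "<button>",
}

locators = [
    Locator("id", "submit-btn", 0.9),
    Locator("class", "btn-primary", 0.7),
    Locator("text", "Submit", 0.5),
]

used, element = find_with_fallback(locators, lambda s, v: page.get((s, v)))
print(used.strategy)  # class
```

Here the top-ranked `id` locator no longer matches, so the lookup quietly moves on to the `class` locator and the test keeps running.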

ReportPortal.io

It’s a learning tool that analyses failures along with you. Depending on how you react to the tests, it tries to understand what is critical and what is not, so that it can flag the tests that need attention. When automated tests fail, you expect them to be catching genuine failures. But there are certain scenarios where a test may fail due to an unstable environment, and that’s when you need to check manually. ReportPortal.io reduces manual intervention by using ML algorithms to analyse the failures. “These are supervised learning algorithms, which means that you have to give your algorithm trained data so that it can make inferences from that,” said Tina.

She took the example of a spam filter: for it to understand whether a piece of mail is spam or not, you first have to teach it what is spam and what is not. Similarly, you have to train ReportPortal.io to identify different kinds of failures.

You can use your existing test framework and easily integrate it with this tool. With just a key and a token, it will send information back to your dashboard. It keeps track of the logs and screenshots, and when it starts seeing errors, it flags them and asks the user to specify what kind of failure each log represents. Once trained, the tool can flag failures by itself.
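The supervised learning idea, where a person labels failures first and the tool classifies later ones on its own, can be sketched in a few lines of Python. This is a toy naive Bayes classifier chosen for illustration, not the algorithm ReportPortal.io uses. The log messages and the labels `environment issue` and `product bug` are made up.

```python
import math
from collections import Counter, defaultdict


def train(examples):
    """Count words per label from (log_text, label) pairs."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts


def classify(text, word_counts, label_counts):
    """Return the label with the highest naive Bayes log-probability."""
    vocabulary = {w for counts in word_counts.values() for w in counts}
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in label_counts.items():
        counts = word_counts[label]
        denominator = sum(counts.values()) + len(vocabulary)
        score = math.log(n / total)
        for word in text.lower().split():
            # Laplace smoothing so unseen words do not zero out the score
            score += math.log((counts[word] + 1) / denominator)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical failures that a person has already labelled
labelled_failures = [
    ("connection refused while calling payment service", "environment issue"),
    ("timeout waiting for database connection", "environment issue"),
    ("expected total 100 but got 90", "product bug"),
    ("assertion failed expected status 200 but got 500", "product bug"),
]

model = train(labelled_failures)
print(classify("connection timeout calling inventory service", *model))
# environment issue
```

Just as with the spam filter, the quality of the classification depends on the labelled examples: the more failures a person has categorised, the less often the tool needs to ask.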

Functionize

This tool is similar to Testim in that it can self-heal. In addition to recording, playback and scripting, it has two interesting features. The first is that you can describe your tests in plain English, and built-in NLP capabilities allow it to understand what you’re trying to say. The second is that, in addition to the tests you already have, the tool keeps track of actual user behaviour and sends all this information back to the portal. It then tries to cluster these user behaviours into categories and, based on these categories, builds its own tests.
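The idea of deriving tests from recorded user behaviour can be illustrated with a simplified Python sketch. It groups identical page flows and counts them, which is a much cruder stand-in for real clustering and is not how Functionize works internally. The session data and function names are hypothetical.

```python
from collections import Counter

# Hypothetical recorded sessions: each is the ordered list of pages a user visited
sessions = [
    ["home", "search", "product", "cart", "checkout"],
    ["home", "search", "product"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "login", "orders"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "login", "orders"],
]


def most_common_flows(sessions, top_n):
    """Group identical flows and return the top_n most frequent with counts."""
    counts = Counter(tuple(session) for session in sessions)
    return counts.most_common(top_n)


def to_test_outline(flow):
    """Render a flow as a numbered, plain-English test outline."""
    steps = [f"{i}. visit '{page}'" for i, page in enumerate(flow, start=1)]
    return "\n".join(steps)


for flow, count in most_common_flows(sessions, top_n=2):
    print(f"Flow seen {count} times:")
    print(to_test_outline(flow))
```

The most frequent flows become candidates for generated tests, so test coverage follows what users actually do rather than what the team guesses they do.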

Apart from these three tools, there are others like Sealights, which provides a suite of products and tools that start right from the development phase and tell you whether an area of untested code is product-ready or not. Even if you’re making a change to existing functionality, it will analyse the change for regression risk and tell you which areas you’ve changed and how they could be impacted. Applitools Eyes is another tool, which intelligently understands that it needs to raise alarms only when something actually changes.
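One way to picture this kind of change impact analysis is to compare the files touched by a change with the files each test exercises. The Python sketch below is an assumption-laden illustration, not how Sealights works: the coverage map, file names and `impact_of_change` function are all hypothetical.

```python
# Hypothetical map of each test to the source files it exercises
coverage = {
    "test_checkout": {"cart.py", "payment.py"},
    "test_search": {"search.py"},
    "test_login": {"auth.py"},
}

# Hypothetical set of files modified in the latest change
changed_files = {"payment.py", "reports.py"}


def impact_of_change(coverage, changed_files):
    """Return (tests that touch changed files, changed files no test covers)."""
    impacted = sorted(
        test for test, files in coverage.items() if files & changed_files
    )
    covered = set().union(*coverage.values())
    untested = sorted(changed_files - covered)
    return impacted, untested


impacted, untested = impact_of_change(coverage, changed_files)
print(impacted)  # ['test_checkout']
print(untested)  # ['reports.py']
```

The first list shows which tests are worth rerunning for this change, and the second shows changed code that no existing test would catch a regression in.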

The speakers advised that before we get caught up with how cool these tools are, it’s important to remember what our goal really is. “Our goal is to deliver quality software in as minimal time to market as possible,” they said. One of the capabilities that these emerging technologies offer is test generation based on user behaviour, which is as close as a test can get to a real user flow. These tests also evolve and heal themselves because they are intelligent enough to do root cause analysis.

On a concluding note, they said that it’s vital to understand the challenges that different projects and teams might be facing. Organisations need to identify the biggest challenge and look for solutions to address it. “We need to embrace AI and ML tools to increase productivity and optimise time to market. Now that our time has been saved by these tools, we can focus on how to take quality to the next step,” said Fathima.

A big shout out to Future of Work 2019 sponsors – Deployment partner Harness.io, Super partner GO-JEK, our Women-in-Tech partner ThoughtWorks, Voice Tech partner Slang Labs, Technology partner Techl33t, AI/ML partner Agara Labs, API Partner Postman and Blockchain partner Koinex.