[Product Roadmap] Building an interactive display solution, how Cybernetyx touched 15M users across 80 countries

A product roadmap clarifies the why, what, and how behind what a tech startup is building. This week, we feature Cybernetyx, the Bengaluru-based interactive display solution startup.

Although the world has moved deeper into technology and all things digital, the way we interact with computers has changed little. Surprisingly, computers today are still designed to take input from decades-old tools such as the keyboard and mouse.

Nishant Rajawat was a research scholar working under the guidance of the late Prof. AN Venetsanopoulos at the University of Toronto in 2006-07, when he developed an intelligent computer vision technology that could track human activity and intent in 3D space using a standard 2D camera.

Later, in 2009, as a student at IIM Shillong, he had to use large displays with the usual keyboard and mouse in classrooms and lecture halls. This prompted him to imagine a new way to interact with screens and data by giving computers visual capabilities similar to those of the human eye and visual cortex.

Building on his earlier research, the team built a quick prototype of a portable optical sensor that could connect to any computer and give it the ability to sense human gestures and intent over any surface.

In the same year, Cybernetyx was founded in India and started building its first product, with Nandan Dubey as CTO. The following year, co-founder Eduard Metzger joined to form Cybernetyx Germany in Rinteln, where the company launched its first software development centre in the country.

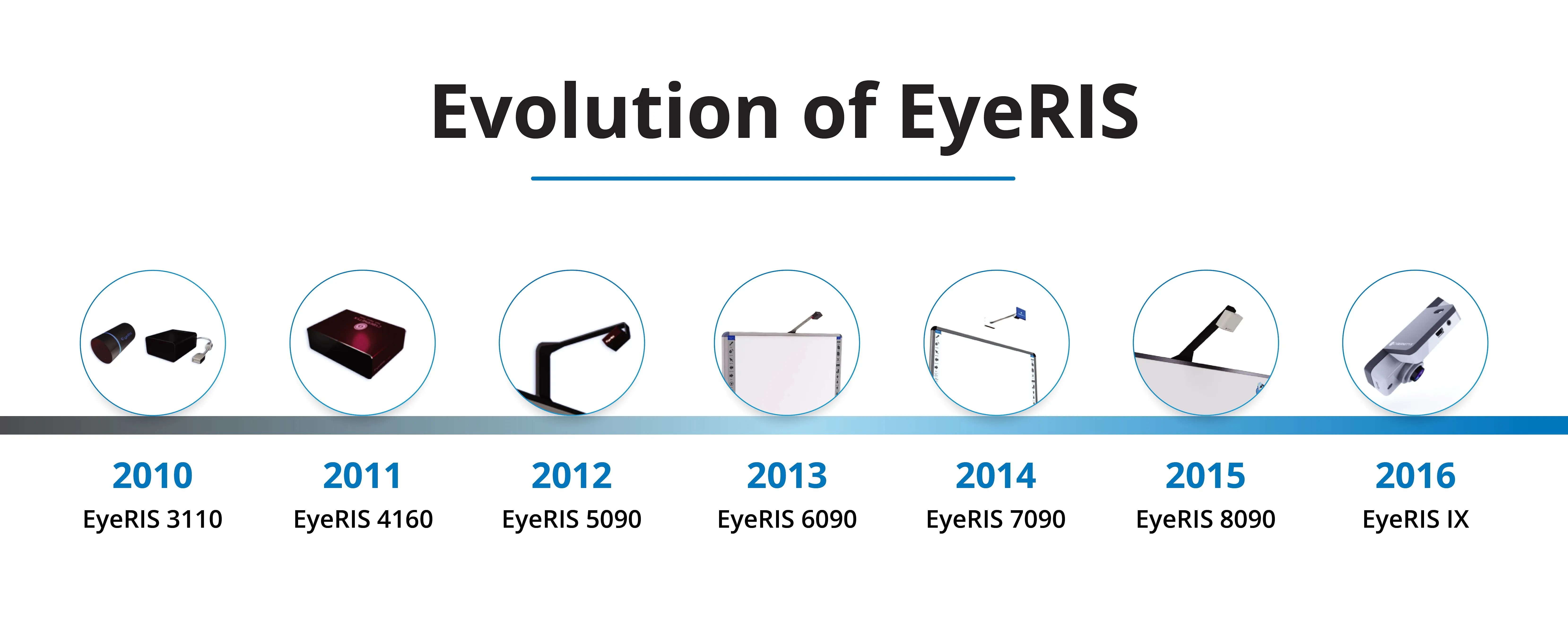

The company now creates novel ways of human-computer interaction using intelligent vision. It invented and launched EyeRIS, one of the world's first intelligent optical touch technologies, which gives computers vision capabilities that allow them to see and understand the world around them. This enables direct human interaction without an intermediary device such as a keyboard or mouse.

Founding team

The initial product

“The first product was a simple camera with no fancy hardware or software, which could simply use machine vision to track and differentiate between the human touch and ambient light over any surface. It was internally referred to as Cyberscreen,” says Nishant.

They built it using an Omnivision VGA CMOS image sensor and a Vimicro 0345 USB controller to send the data to the computer. The difficult part was the optics: the unit required a wide-aperture, low-distortion lens to report touch gestures and coordinates accurately.

“It took us about six months to build the first working prototype and about 1.5 years to get the product into mass production. Cybernetyx’s strength comes in part from our early realisation that the camera would become the primary sensor for human-computer interaction (HCI). The camera is the most versatile of sensing technologies, with the ability to identify shapes, like human hands and fingers, as well as textures, like surface and pen markings and text,” Nishant says.

This realisation marked a revolutionary leap in the market, as sensors such as IR LEDs, electromagnetic sheets and ultrasound were previously regarded as superior. Today, most global display makers have chosen optical vision as the primary sensing technology for interactive surfaces, and Cybernetyx continues to lead innovation in the field.

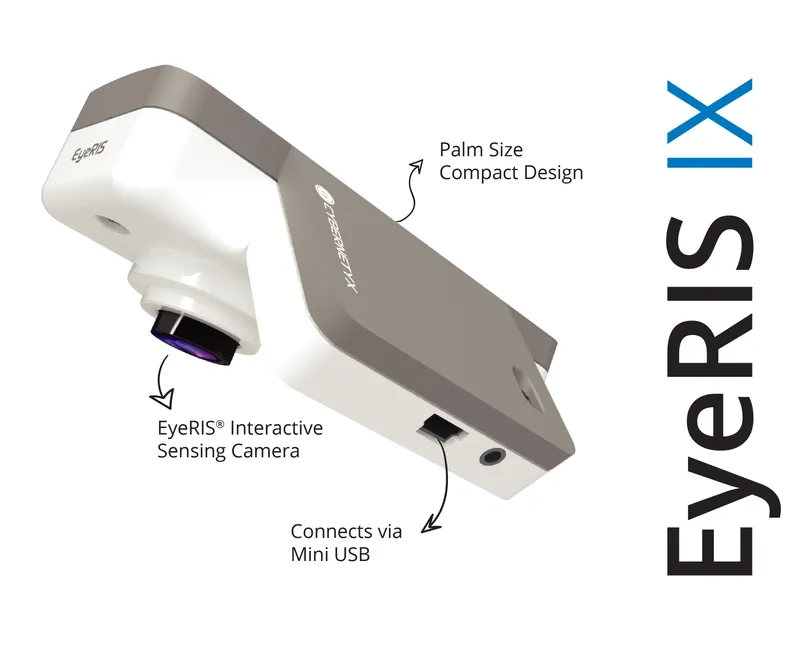

Inspired by the workings of the human eye, EyeRIS (short for Eye-Like Rapid Imaging System) is a high-speed camera unit that can plug into any computer and convert its display (such as a monitor, LCD screen, or even a projector) into a multi-touch surface up to 100 inches in size, effectively a giant smartphone or tablet.

With its Visual Touch 3D optical tracking platform designed for a natural user interface, it senses finger-touch, stylus touch, multitouch, and gestures on the screen and delivers an intuitive, seamless experience based on natural human input.

Building the Eye out

“The Cybernetyx EyeRIS camera sensor was inspired by human vision, which is responsible for more than 70 percent of the entire sensory input to our brain,” says Nishant.

He adds that computers need to have sensors to be able to interact with the world more intelligently and assist us better.

The vision intelligence algorithms inside EyeRIS cameras map the size and topography of each surface. Based on this data, they learn how people interact with that surface, taking into account factors that include, but are not limited to, signal shape and strength, surface size, curvature, distance, ambient lighting and noise.

When used on a surface for the first time, EyeRIS displays a unique pattern across the surface to gauge its planarity; the sensor captures this pattern and the algorithms learn from it. EyeRIS then looks for specific types of signals emanating from the input devices to learn to distinguish genuine touch signals from noise.

Once EyeRIS has learnt this data, it can filter noise from the human signal at up to 200 scans per second, resulting in latency of less than 6 ms and making it one of the fastest input devices in the computer industry.
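The article does not describe how EyeRIS separates touch from noise. As an illustration of the general idea only, the sketch below uses plain background subtraction: it learns each pixel's mean and spread from idle calibration frames, then flags pixels that rise well above that baseline and reports their centroid as a touch point. The function names and the thresholds `k` and `min_delta` are hypothetical assumptions, not Cybernetyx's method.

```python
from statistics import mean, pstdev


def learn_background(frames):
    """Learn per-pixel mean and spread from idle calibration frames.

    Each frame is a 2D list of grey levels indexed as frame[y][x].
    """
    h, w = len(frames[0]), len(frames[0][0])
    mu = [[mean([f[y][x] for f in frames]) for x in range(w)] for y in range(h)]
    sd = [[pstdev([f[y][x] for f in frames]) for x in range(w)] for y in range(h)]
    return mu, sd


def detect_touch(frame, mu, sd, k=4.0, min_delta=20.0):
    """Return the centroid (x, y) of pixels that stand out from the learned
    background, or None if nothing does."""
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            # A pixel counts as signal only if it beats both k times its own
            # noise level and a fixed minimum margin (both are assumed values).
            if value - mu[y][x] > max(k * sd[y][x], min_delta):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)


idle = [[[10, 10, 10], [10, 10, 10], [10, 10, 10]] for _ in range(3)]
mu, sd = learn_background(idle)
touch = [[10, 10, 10], [10, 10, 200], [10, 10, 10]]
print(detect_touch(touch, mu, sd))  # (2.0, 1.0)
```

A steady bright spot, such as a reflection that is present during calibration, is absorbed into the learned baseline, while a new touch signal stands out. A real system would also track several blobs at once and update the baseline as lighting drifts.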

Fast, reliable touch tracking of this kind lets users compute on any large surface with great freedom. This is the promise of ubiquitous surface computing: think huge touch tablets created out of simple flat walls.

Cybernetyx EyeRIS technology already powers millions of interactive projectors and displays, making it the highest-selling portable vision interactive system in the world, with over 15 million users and sales in more than 80 countries.

According to the latest V4C research, EyeRIS was ranked India’s highest-selling interactive smart classroom product of the last decade, with net sales to more than 123,000 classrooms (2009-2019) and a market share of over 22 percent.

“In India, Cybernetyx has provided EyeRIS technology for some of the biggest state-level government ICT projects including the prestigious GyanKunj project by Govt. of Gujarat, spanning over 4000 classrooms and a 5051-classroom project in Govt. of Rajasthan, besides several other major deployments with the Governments of Kerala, Delhi, J&K, and West Bengal,” says Nishant.

For global projector manufacturers, the team designed a tiny module called EyeRIS Core that can be embedded inside a projector unit to convert it into an interactive projector.

Changing edtech

“The second major interest we saw came from the edtech vertical. EyeRIS could be attached to an existing dry-erase whiteboard or chalkboard with a simple mount, converting it into a full-fledged smart interactive whiteboard system with multitouch support. This was a more advanced and elegant solution than the bulky Smart (Interactive) Board solutions available in the market,” Nishant says.

As the products gained acceptance in the learning space, the team understood that teachers needed interactive digital tools that could be used naturally on larger surfaces. With these requirements in mind, they developed an integrated smart classroom product under the EyeRIS IntelliSpace brand, along with a dedicated multitouch teaching application that gave teachers more tools to write, draw, annotate and access content directly on the screen.

For the corporate segment, the team launched the Thinker series, a retrofittable visual collaboration tool that helps in-house and remote teams interact and collaborate on a shared canvas. For stand-up meetings, brainstorming and ideation sessions, all-hands, and even exhibitions and events, the products helped transform existing meeting rooms into highly productive modern spaces equipped with advanced tools and technology.

“Even though our product has both hardware and software components, our fast release cycles let us gather several major learnings in a short span of time. Our OEM relationships with major global display companies also helped us get a lot of early feedback on our MVP,” Nishant says.

Classroom deployment

Understanding the usage patterns

The first version used long-focus lens optics, and the sensor had to be placed around 10 ft from the screen for touch calibration. While this made the image-processing algorithms simpler to code, it was not good for the user experience.

While using the product, users would inadvertently step between the sensor and the screen, breaking the line of sight (the ‘occlusion’ or ‘shadow’ problem). The sensor could then no longer track the user’s input, resulting in broken touch.

The team therefore designed a custom ultra-wide-angle fisheye lens with a 160° field of view (FOV) that had only radial distortion (which can be compensated for in software), allowing the EyeRIS image sensor unit to be placed right above the board or screen, less than a foot away.
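Radial distortion moves each point along the line through the image centre by an amount that depends only on its distance from the centre, which is why it can be undone in software. A minimal sketch, assuming the common polynomial radial model x_d = x_u(1 + k1 r² + k2 r⁴) on normalised coordinates (pixel offset from the principal point divided by the focal length). The coefficient values are made up, and a 160° fisheye may in practice need a dedicated fisheye projection model instead; the article does not say which model EyeRIS uses.

```python
def distort(xu, yu, k1, k2):
    """Forward radial model: undistorted normalised coords -> distorted ones."""
    r2 = xu * xu + yu * yu
    scale = 1 + k1 * r2 + k2 * r2 * r2
    return xu * scale, yu * scale


def undistort(xd, yd, k1, k2, iters=20):
    """Invert the radial model by fixed-point iteration.

    Since x_d = x_u * s(r_u), repeatedly set x_u = x_d / s(r_u), starting from
    the distorted point. This converges for moderate distortion.
    """
    xu, yu = xd, yd  # initial guess: no distortion
    for _ in range(iters):
        r2 = xu * xu + yu * yu
        scale = 1 + k1 * r2 + k2 * r2 * r2
        xu, yu = xd / scale, yd / scale
    return xu, yu


# Hypothetical barrel-distortion coefficients (negative k1).
xd, yd = distort(0.3, -0.4, k1=-0.2, k2=0.05)
print(tuple(round(v, 6) for v in undistort(xd, yd, k1=-0.2, k2=0.05)))  # (0.3, -0.4)
```

Because the model has no tangential terms, each correction is a single scale factor applied along the radius, which keeps the per-point cost low enough for high frame rates.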

“It was extremely hard to reduce distortion in a convoluted image while maintaining accuracy and speed – but it paid off immensely in terms of product UX and success. Not only did this make the product easier to deploy and use, but it also made the problem of occlusion or shadow completely disappear,” Nishant says.

The original sensor prototype was designed for 30 FPS at VGA (640x480) resolution. While this yielded a very usable product, there was more than 30 ms of lag between consecutive touch input samples, which undermined the joy of writing digitally. Users want a pen-on-paper feel from interactive devices, and the team discovered that this required a response time below 16 ms, and preferably as low as 8 ms.
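The latency figures follow from simple frame-period arithmetic: at F frames per second a new sample arrives every 1000/F ms, so the frame period is a lower bound on the gap between touch samples (end-to-end latency also adds exposure, transfer and processing time). The helper names below are illustrative.

```python
import math


def frame_period_ms(fps):
    """Time between consecutive frames, i.e. between consecutive touch samples."""
    return 1000.0 / fps


def min_fps_for(target_ms):
    """Smallest integer frame rate whose frame period is at most target_ms."""
    return math.ceil(1000.0 / target_ms)


for fps in (30, 60, 120, 200):
    print(f"{fps:>3} FPS -> {frame_period_ms(fps):5.1f} ms between samples")

print(min_fps_for(16), min_fps_for(8))  # 63 125
```

At 30 FPS the period is about 33.3 ms, matching the "over 30ms" lag described above, while 200 FPS gives 5 ms, consistent with the sub-6 ms figure quoted for EyeRIS.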

But there was a small caveat: the USB 2.0 technology prevalent at the time could carry only about 180 Mbps of bandwidth, which roughly translates to around 30 FPS of RGB images. Simply put, USB 2.0 cannot support a 60 FPS RGB camera without compressing the images in MJPEG or another format, which results in a loss of image quality.
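A rough bandwidth budget shows why frame rate and pixel format collide on USB 2.0. USB 2.0 High Speed signals at a nominal 480 Mbps, but protocol overhead leaves far less for a video stream; the sketch uses the article's figure of about 180 Mbps. The bits-per-pixel values are assumptions about typical uncompressed formats, since the article does not say which format the camera used.

```python
USABLE_MBPS = 180.0  # usable USB 2.0 throughput figure used in the article


def stream_mbps(width, height, bits_per_pixel, fps):
    """Uncompressed video bit rate in megabits per second."""
    return width * height * bits_per_pixel * fps / 1e6


# Assumed bits per pixel for typical uncompressed formats.
FORMATS = {
    "RGB24 colour": 24,
    "YUY2 colour": 16,
    "8-bit raw single channel": 8,
}

for name, bpp in FORMATS.items():
    for fps in (30, 60):
        rate = stream_mbps(640, 480, bpp, fps)
        verdict = "fits" if rate <= USABLE_MBPS else "exceeds budget"
        print(f"{name:<26} {fps} FPS: {rate:6.1f} Mbps, {verdict}")
```

Under these assumptions, 16-bit colour fits at 30 FPS (about 147 Mbps) but not at 60 FPS (about 295 Mbps), while an 8-bit single-channel stream fits at 60 FPS. Full 24-bit RGB at 30 FPS would already need about 221 Mbps, so the "around 30 FPS" figure fits best with a 16-bit colour format.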

Removing the barriers

“We chose to use a monochrome camera, which outputs only raw Bayer data and can go up to 60 FPS on USB 2.0. This brought the latency to under 16 ms. We also implemented adaptive cropping in our sensors, which shrinks the image output from the sensor to a smaller window matching the actual screen size being tracked, removing all unnecessary area around the display from the camera’s view,” says Nishant.

This boosted the frame rate to up to 200 FPS and brought the latency down to 6 ms, even on a 100-inch screen, which still makes EyeRIS the fastest touch technology in the world. Finding and developing this approach was quite a challenge, but it eventually proved to be one of EyeRIS’s major USPs, as nearly every other interactive technology at the time had latency above 30 ms, making them slow and unnatural to use.
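Cropping helps because a bandwidth-limited frame rate scales inversely with the number of pixels per frame. A minimal sketch, assuming the screen's four corners have already been located in the sensor image and that the sensor supports windowed (region-of-interest) readout, as many CMOS sensors do. The corner coordinates, margin and 180 Mbps budget are illustrative assumptions.

```python
import math


def crop_window(corners, margin=10, sensor_w=640, sensor_h=480):
    """Smallest axis-aligned window (x, y, width, height) containing the
    screen corners plus a safety margin, clamped to the sensor bounds."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    x0 = max(0, math.floor(min(xs)) - margin)
    y0 = max(0, math.floor(min(ys)) - margin)
    x1 = min(sensor_w, math.ceil(max(xs)) + margin)
    y1 = min(sensor_h, math.ceil(max(ys)) + margin)
    return x0, y0, x1 - x0, y1 - y0


def bandwidth_limited_fps(width, height, bits_per_pixel=8, budget_mbps=180.0):
    """Highest frame rate the link budget allows for a given window size."""
    return budget_mbps * 1e6 / (width * height * bits_per_pixel)


# Hypothetical corners of a board seen from a sensor mounted just above it:
# the screen occupies a wide, shallow band of the image.
corners = [(20, 300), (620, 300), (600, 420), (40, 420)]
window = crop_window(corners)
print(window)  # (10, 290, 620, 140)
print(round(bandwidth_limited_fps(640, 480)), round(bandwidth_limited_fps(*window[2:])))  # 73 259
```

In this made-up example the bandwidth ceiling rises from about 73 FPS for the full frame to about 259 FPS for the cropped window. In practice the sensor's own readout speed and exposure time also cap the rate, so bandwidth is only one of the limits.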

The team wanted the product to be ready and usable the moment a user walks up and taps on the surface. However, they noticed that the display would sometimes move slightly relative to the sensor due to environmental factors or user behaviour, requiring the user to calibrate the screen again (tapping several dots on the screen to realign the sensor). This was not ideal for a ready-to-use product.

For the product to be always ready, it would have to calibrate itself without human intervention. However, this was not possible because the sensor could see only IR light, which is not present in usable amounts under natural conditions, so it could not see a calibration pattern shown on the screen. It therefore needed to see in both the visible band (480-700 nm) and the IR band (around 850 nm in EyeRIS’s case), which is not possible with a single fixed filter.

“To make it work, we created a small solenoid-based moving filter tray that sits in front of the sensor and can be switched automatically between visible and IR mode with a simple command from the computer application,” Nishant says.

The focus on the device

With this mechanism, the unit could recalibrate automatically whenever it moved: it switched to visible-spectrum mode, showed a particular pattern on the screen to indicate the coordinates to the camera, and switched back to IR mode, all in under 5 s.
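The on-screen pattern tells the camera where known screen coordinates appear in its image. One standard way to turn such correspondences into a camera-to-screen mapping, assuming lens distortion has already been removed so the screen behaves as a plane under perspective, is a homography estimated from at least four points. The sketch below is that textbook technique, not necessarily what EyeRIS does; the detected camera coordinates are hypothetical, and a production system would typically use more points with a least-squares fit to average out detection noise.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def fit_homography(camera_pts, screen_pts):
    """Estimate the 3x3 homography H (with H[2][2] = 1) mapping four camera
    points onto four screen points via the direct linear formulation."""
    a, b = [], []
    for (x, y), (u, v) in zip(camera_pts, screen_pts):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def camera_to_screen(H, x, y):
    """Apply the homography, including the perspective divide."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


screen = [(0, 0), (1920, 0), (1920, 1080), (0, 1080)]
camera = [(102, 48), (538, 71), (561, 402), (79, 377)]  # hypothetical detections
H = fit_homography(camera, screen)
u, v = camera_to_screen(H, 561, 402)
print(round(u, 3), round(v, 3))  # 1920.0 1080.0
```

Once H is known, every tracked touch point in camera coordinates maps directly to a screen pixel, so recalibrating after the unit moves only requires re-detecting the pattern points.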

Switching filters introduced some refraction error between the coordinates of the two modes, following Snell’s law, “but we were able to solve that accurately in our software via training and a compensation factor,” Nishant adds.
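The article attributes the offset to refraction through the two different filters (Snell's law, n₁ sin θ₁ = n₂ sin θ₂) and says it was corrected via training and a compensation factor, without giving details. As a hedged illustration, the sketch below assumes the offset can be modelled as an independent scale and offset per axis and learns them by ordinary least squares from targets located in both modes. The function names and sample data are hypothetical, and a real correction might need a position-dependent (for example polynomial) model.

```python
def fit_scale_offset(src, dst):
    """Ordinary least-squares fit of dst ≈ a * src + b for one axis."""
    n = len(src)
    mean_s = sum(src) / n
    mean_d = sum(dst) / n
    sxx = sum((s - mean_s) ** 2 for s in src)
    sxy = sum((s - mean_s) * (d - mean_d) for s, d in zip(src, dst))
    a = sxy / sxx
    return a, mean_d - a * mean_s


def fit_compensation(visible_pts, ir_pts):
    """Fit an independent scale and offset per axis from paired samples."""
    ax, bx = fit_scale_offset([p[0] for p in visible_pts], [p[0] for p in ir_pts])
    ay, by = fit_scale_offset([p[1] for p in visible_pts], [p[1] for p in ir_pts])
    return ax, bx, ay, by


def visible_to_ir(params, x, y):
    """Map a coordinate measured in visible mode into the IR-mode frame."""
    ax, bx, ay, by = params
    return ax * x + bx, ay * y + by


# Hypothetical training pairs: the same targets located in both filter modes.
visible = [(100, 80), (320, 240), (540, 400), (200, 380)]
ir = [(1.002 * x + 1.5, 0.998 * y - 0.8) for x, y in visible]
params = fit_compensation(visible, ir)
print([round(p, 4) for p in params])  # [1.002, 1.5, 0.998, -0.8]
```

Fitting on paired samples means the correction absorbs whatever fixed shift and scaling the filter swap introduces, without needing the filters' exact refractive indices or thickness.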

This work took considerable investment and effort on both the hardware and software side, but EyeRIS became the first self-calibrating touch device in the world that doesn’t need any pre-calibration at the factory stage to work. Users can simply install it in any setting, in any country, and EyeRIS will find a way to calibrate itself and work.

“While our expertise is in image processing and computer vision interface, when we launched our education product range, it was very clear to us that to succeed in this space, it is very important to solve for content besides just interactivity. A teacher may have the best digital interactive whiteboard in the classroom, but without the right instruction tools and multimedia content, it would just sit there catching dust most of the time,” Nishant says.

The team developed MyCloud, a multimedia content search engine, and bundled it free with its interactive software, allowing teachers to find and present content from the internet in classrooms and enrich students’ learning experience.

“For our core HCI space, we are now developing mid-air touch solutions for touchless interaction, as people do not feel comfortable using touchscreens in public spaces such as airports and malls. Also, further developing our VR/AR input device portfolio, we are working on Alfred, which is our brain-computer interface module that has only six electrodes and can be embedded inside a VR headset or a headband – and can take commands from human thought to control basic computer operations,” Nishant says.

Edited by Anju Narayanan

