Can an AI chatbot really be your mental health ally?

Artificial intelligence offers innovative solutions to the challenges of accessibility, affordability and the shortage of trained professionals in mental healthcare. But can AI-driven tools really bridge the gap? And what ethical concerns does this raise?

Trigger warning: Story mentions suicide

Arnav*, a product manager from Bengaluru, sought therapy after he broke up with his girlfriend and soon realised that a one-hour online session with a counsellor every week was not enough to pour out all his feelings.

His ex-girlfriend sending him extremely long text messages didn’t help either. Feeling overwhelmed, he turned to ChatGPT for help.

Arnav pasted one such message into ChatGPT and asked the chatbot to take on the role of a relationship counsellor and interpret the message using psychological frameworks.

“I prompted it to draft a good reply, keeping in mind the other person's needs,” says Arnav.

This casual attempt opened the floodgates for Arnav.

“Since then, I have used ChatGPT for everything—from writing a breakup message to putting my point forward to an irritating colleague respectfully. I think I have a direct and confrontational approach to communication that doesn’t go well with most people. ChatGPT has helped me in this regard,” he says.

Arnav isn’t alone.

Parth*, a communications professional in Mumbai, also turned to ChatGPT after learning how it had helped others when traditional doctors couldn’t.

Contrary to what one may think, Parth was not averse to opening up to an AI chatbot about his depression and smoking addiction. He asked it for guidance on how to overcome these problems and lead a healthier, more balanced life.

The platform suggested a smoking cessation plan that focused on mental and emotional health, forming healthy habits, building a support system and, most importantly, seeking help.

This plan, Parth says, has helped him deal with his feelings to a large extent.

Rajani*, a media professional from Bengaluru, has also used several AI platforms to deal with grief and other overwhelming emotions. Besides ChatGPT, she has used 7Cups, an AI chatbot for anxiety; August AI, a chatbot that answers health queries on WhatsApp; and Wysa, an AI platform for mental health.

Indu Harikumar*, an artist from Delhi, frequently uses Headspace, a mental health app, and has enrolled in several of its courses to help her deal with anger and cultivate patience and compassion.

Arnav, Parth, Indu and Rajani are among a steadily growing number of people turning to AI tools, such as chatbots and virtual therapists, to make sense of what they are feeling and to find ways to cope with their emotions.

The medium is easy to use, convenient, accessible and affordable; it offers quick answers and respects users’ anonymity. But can it replace the sensitivity, empathy and knowledge that trained, experienced professionals offer? And how safe and reliable are AI tools when it comes to mental health and well-being?

Social Story does a deep dive.

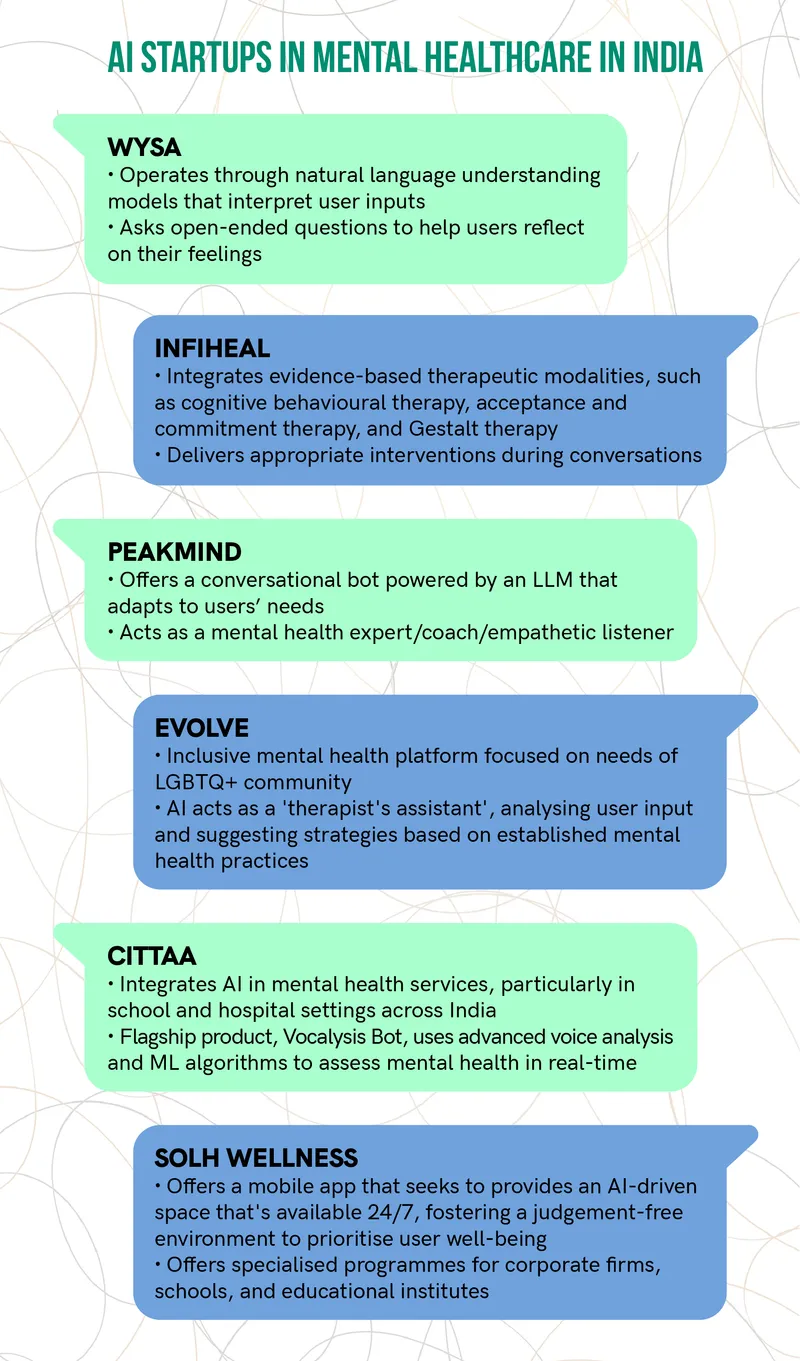

Mental health AI startups

Infographic: Nihar Apte

According to a World Health Organization (WHO) study, AI has the potential to enhance mental health services through tools that can assist with diagnosis, treatment recommendations, and monitoring.

Buoyed by the potential of AI, a clutch of startups has emerged in the mental health space in India, offering pioneering solutions and tools to address mental health concerns.

Wysa is an AI platform for mental health founded in 2016 by Jo Aggarwal and Ramakant Vempati.

Stress and burnout had taken their toll on Aggarwal, who began to suffer from depression. At the same time, the couple were caring for Vempati’s elderly father, who had bipolar disorder.

Their first-hand experience of mental illness drove them to develop a machine learning (ML) platform that analyses data points already available on mobile phones, such as calling patterns, screen time and sleep time, to build a three-dimensional view of a person’s mental health.

The Wysa app was subsequently built around evidence-based cognitive behavioural therapy exercises, a library of interactive self-help content and an emotionally intelligent AI chatbot designed to deepen user engagement.

Vempati shares that, within five months of its launch, the founders received an email from a 13-year-old girl who said that Wysa was the only thing that was helping her after she attempted to take her own life.

“We’ve since received more than 180 personal messages from people who claim the app has saved their lives,” he says.

Infiheal is another mental health startup. It offers Healo, a chatbot positioned as an advanced AI co-therapist, which combines natural language processing (NLP), ML and retrieval-augmented generation (RAG) techniques.

“Users typically interact with our AI system through anonymous, text-based conversations, where the platform provides guidance and support based on their mental health needs,” says Srishti Srivastava, Founder, Infiheal.

The scarcity of queer-affirmative therapists and the heteronormativity of digital health platforms led Anshul Kamath and Rohan Arora to start Evolve, an inclusive mental health platform focused on the needs of the LGBTQ+ community.

Evolve uses NLP and ML to understand user needs and provides support by acting as a ‘therapist’s assistant’, analysing user input and suggesting strategies based on established mental health practices.

“It takes into account the user’s mood that is selected and inputted by the user, and then accordingly it provides content suggestions,” says Kamath.

Anjali*, who was grappling with the complexities of her sexuality, started a programme on Evolve called ‘Explore Your Identity’, which provides psychoeducation and helps users introspect using techniques such as cognitive behavioural therapy and mindfulness.

She says it helped her understand that her attraction to women was perfectly normal and also connected her with others who were going through similar experiences.

Started in 2020 by four friends from the IITs (Neeraj Kumar, Manish Chowdhary, Sandeep Gautam and Parth Sharma), PeakMind is a mental health AI platform focused on students. It features Peakoo, a conversational bot powered by a large language model that adapts to users’ needs, acting as a mental health expert, a coach or an empathetic listener.

“Our ML-driven technology monitors user behaviour to identify distress and self-harm [and] suicidal signals for timely detection and proactive support,” says Kumar.

PeakMind has 1.2 lakh users and works with schools, colleges, and coaching centres.

Addressing ethical concerns


The emergence of so many AI tools and platforms naturally raises questions about how effective their solutions are.

Can AI tools address complex issues related to mental health and well-being in a safe and responsible manner? How do mental health platforms ensure that AI doesn’t offer inappropriate advice or handle complex mental health issues irresponsibly?

“All the content and guidance Healo provides are curated and vetted by psychologists, psychiatrists, and mental health professionals. This ensures that the information delivered is both authentic and safe for users, minimising any risk of harm,” says Srivastava of Infiheal, which offers the Healo chatbot.

For complex mental health issues, Healo has been trained to avoid offering diagnostic assessments to users, she adds.

Wysa’s platform aims to mitigate ethical concerns by using a clinician-approved rule engine for its AI responses. It is also equipped with crisis-detection mechanisms that urge users in immediate danger to seek human support, which, the startup’s founders say, ensures that complex issues are handled responsibly.

Wysa also claims to have safety guardrails to ensure that user data is stored securely. The app is now on its 889th version and continues to evolve, says Smriti Joshi, Chief Psychologist, Wysa.

Both Wysa and Infiheal follow a ‘hybrid’ approach that transitions from AI-driven interactions to human therapists whenever the need arises.

There is a huge shortage of mental healthcare professionals in India, with only 0.3 psychiatrists, 0.07 psychologists, and 0.07 social workers per 100,000 people.

Given this dire shortage, Dr Neerja Aggarwal, Co-founder, Emoneeds, a startup offering online psychiatric consultations, counselling and therapy, believes AI is a vital supplement, particularly for initial consultations, as it makes it easier to assess patients’ needs and offer immediate support.

However, there are key elements of human-led therapy that AI cannot replicate, she says. These include emotional intelligence, empathy, and the ability to navigate complex interpersonal dynamics.

“Human therapists excel in understanding nuanced emotional cues and providing a comforting presence that fosters trust and rapport,” she says.

Vempati says Wysa’s AI uses more than 100 natural language understanding (NLU) models to interpret user inputs and direct them to appropriate therapeutic pathways, ensuring that responses are relevant and grounded in clinical safety.

When Reenu* was struggling with depression and even had suicidal thoughts, she decided to seek suggestions from ChatGPT. She says the chatbot urged her to reach out to someone who could help her—like a mental health professional or a person she trusted.

It also suggested ‘being patient’, ‘setting small goals’, ‘staying connected’ and ‘talking to someone’, and recommended therapy.

“What stood out for me was this: the chatbot said we could talk about what I was going through, explore coping strategies, and discuss ways to find hope and resilience. While it cannot replace professional help, it said it was there to offer understanding and encouragement,” says Reenu.

In Reenu’s case, the ‘right’ prompts may have elicited suitable responses from ChatGPT, but that does not always happen.

Though young people are using platforms such as Discord, Instagram and ChatGPT to explore ways to improve their mental well-being, this may not always be safe, says Joshi.

She points out that there have been cases where ChatGPT offered a response which was “clinically unsafe”.

“You can use it for practical solutions for communication issues or to suggest a grounding exercise… It can give you answers that may be factually correct, but not contextually correct,” she says.

“What’s important is that you use the right terminology. But not everyone is adept at this or has the psychological awareness about what they are going through and what they need,” says Joshi.

Despite the risks associated with tools like ChatGPT, Arnav says he will continue to use the chatbot alongside regular therapy. He says the significant amount of time he has spent studying psychology has made him an advanced “prompter”, able to steer ChatGPT in the desired direction and keep it from becoming erratic.

Not everyone has that skill, however, and this is a risk to be wary of when using AI tools for therapy.

Nor has everyone had experiences as favourable as those of Arnav, Parth and Rajani. Some have found mental health AI platforms disappointing and do not regard AI chatbots as companions.

Sanjana*, a media expert based in Noida, who was going through a rough patch, turned to a couple of AI platforms and found the responses very “templated” and lacking in empathy.

“I found some sort of bias. If I said I was feeling sad, it would say I had depression, which was not the case. In regular therapy, there is a human element involved,” she says.

Going forward


As AI evolves, its use in mental healthcare is likely to grow. While the hybrid approach will continue, AI is also expected to augment professional support by analysing data and behaviour patterns.

“AI will be able to supplement professional support by improving compliance, providing suitable nudges, analysing cues from natural behaviour, and combining data from various sources to help the professional significantly. Given the significant shortage of professionals, using AI seems truly intelligent,” says Neeraj Kumar of PeakMind.

AI startups in mental healthcare are looking to capitalise on the opportunities in the space. This includes expanding AI’s language capabilities to offer support in more languages and extending access beyond urban centres.

For instance, Wysa is integrating its AI platform with broader rural healthcare initiatives, including telemedicine platforms and mobile health clinics.

In rural areas where there is a shortage of mental health professionals, Wysa’s hybrid ‘human-in-the-loop’ approach aims to address immediate mental health needs and also build local capacity for mental health support in underserved regions. AI provides initial screening, emotional support, and continuous monitoring, while human intervention is available whenever necessary.

This approach aims to equip community health workers with AI-driven tools. Once trained to use the technology, these community champions become essential links in delivering care, ensuring that mental health support is proactive and personalised even in resource-limited settings, says Wysa’s Joshi.

(*Some names have been changed to protect identity.)

(If you or someone close to you is facing mental health issues, you can contact the national 24x7 toll-free Mental Health Rehabilitation Helpline KIRAN at 1800-599-0019).

Edited by Swetha Kannan