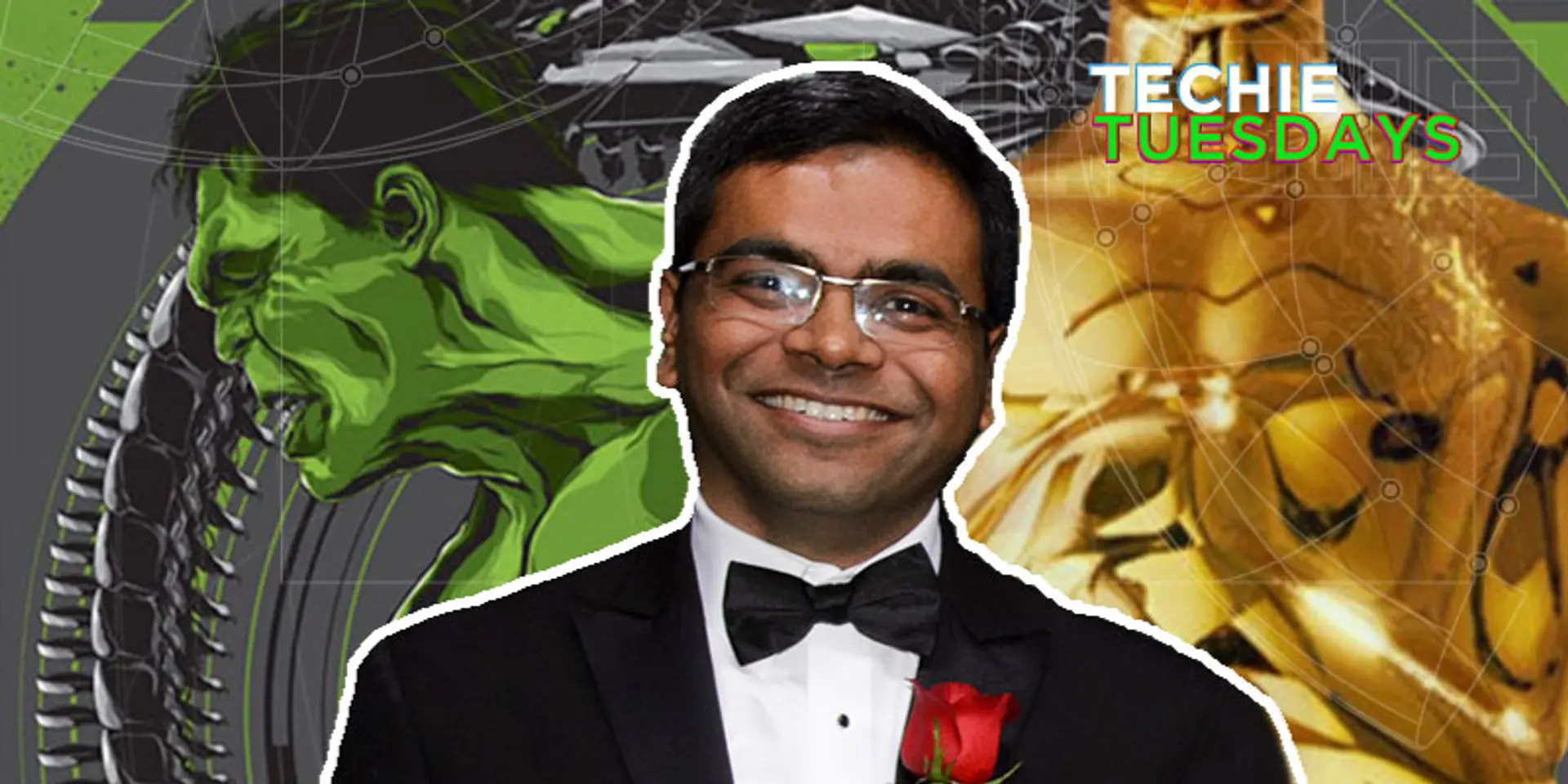

Meet Kiran Bhat—the man who engineered Hulk and Tarkin to win 2017 sci-tech Oscar

Our protagonist for this week’s Techie Tuesdays is Kiran Bhat, who won the 2017 Oscar for technical achievement. The technology which he developed at IL&M has been used in movies like Avengers (for Hulk), Pirates of the Caribbean (for Davy Jones), Teenage Mutant Ninja Turtles, Warcraft, Star Wars: Episode VII, and Rogue One: A Star Wars Story. With his startup Loom.ai, Kiran is on a mission to build the best 3D representation for every face on the planet.

Simply put, Kiran Bhat is the brain behind the technology which made it possible to make the facial expressions of Hulk (The Avengers) and Tarkin (in Rogue One) look real. Though it took him (and his team) seven years to build the technology, the results were as sweet as anyone would imagine.

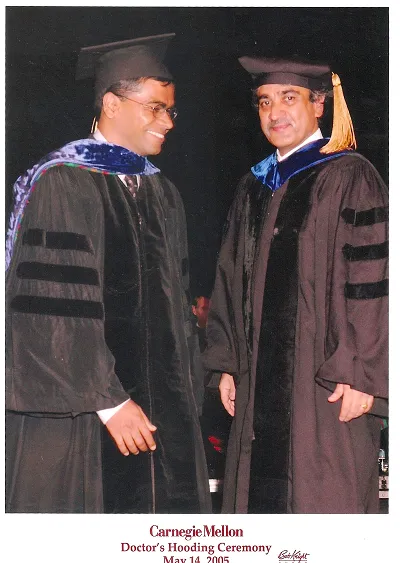

Kiran won the Oscar for technical achievement in 2017 (Sci-Tech Academy Awards) for developing the facial performance-capture system along with his team at IL&M. He holds degrees in electrical and electronics engineering and in mechanical engineering from BITS Pilani, and a PhD in Robotics from Carnegie Mellon University.

A tech entrepreneur with a decade of VFX experience in building computer vision algorithms for synthesising photo-realistic CGI characters for movies and computer games, Kiran is the chosen one for this week's Techie Tuesdays.

YourStory spoke with Kiran to capture his journey from Coimbatore to winning an Oscar.

The engineer in the house of a rocket scientist

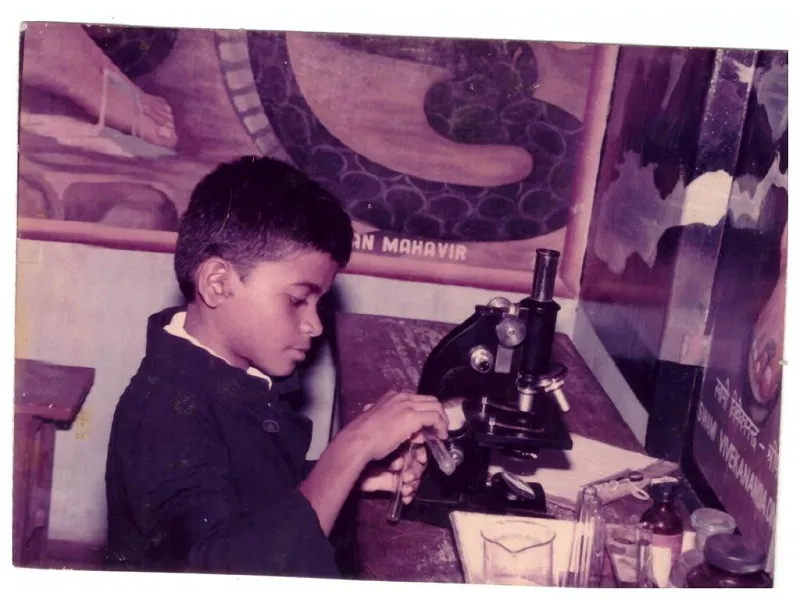

Kiran was born and brought up in Thiruvananthapuram. His father was a rocket scientist at ISRO, who later decided to move to Coimbatore to start his own company. Kiran recalls being an academically oriented kid who got exposed to programming early on. He was exposed to lateral thinking for the first time when he moved to a school in Coimbatore (after class seven).

Kiran loved maths, physics, and chemistry. He could speak Malayalam, Thulu and Tamil. He even learnt French in classes 11 and 12. Kiran came first in the district in the class 12 board examinations.

He recalls his high school days, “I was blown away by the movies The Abyss and The Terminator. Looking at the computer-generated characters, I wondered what it would take to build such a thing.”

Kiran’s choice to go to BITS Pilani after class 12 was somewhat contentious in his family as he had secured admissions in many other nearby universities (Chennai, Trichy, and other southern cities) as well.

Related read - Story of Anima Anandkumar, the machine learning guru powering Amazon AI

BITS Pilani days

BITS Pilani had people from different geographies and it felt more than just an academic institute. Kiran says,

You could find your corner in the world and do what you wanted to do. There was no rat race of achieving just one goal. What made BITS special was that a lot of people came to the college with similar drive and aspirations. I've always believed that the best places to study are the ones where you can learn from others.

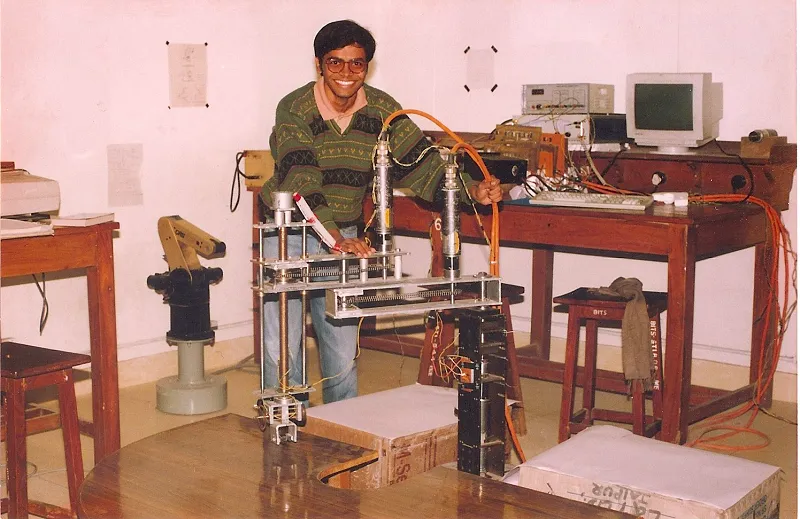

Since Kiran was interested in continuous math, calculus, and mechanics, he chose mechanical engineering (which had all three components). Because of his interest in robotics, which required him to learn control theory at the end of his first year, Kiran opted for electrical and electronics engineering (in addition to mechanical engineering). He adds, “It made a complete package as I could build the whole system and drive it (and give it a brain).”

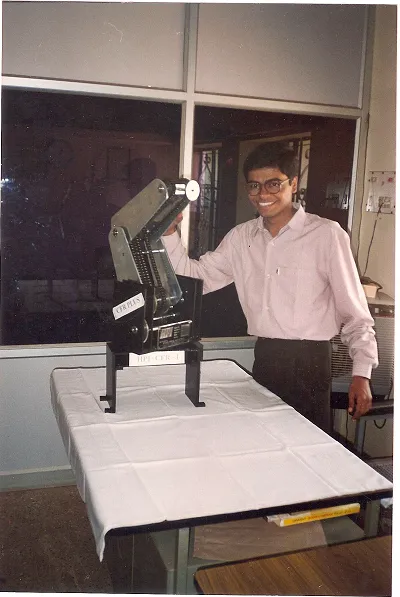

He started tinkering with robots as a hobby, but soon it became an obsession. In his second year, Kiran built a PD controller. He says, “We had a robot arm in the lab. I was trying to get it to move in space. The control algorithm which allowed that was written with fuzzy logic.”
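For readers unfamiliar with the term, a PD (proportional-derivative) controller can be sketched in a few lines. This is only an illustration, not the controller Kiran built: the unit-inertia joint, the gains `kp` and `kd`, and the function name `simulate_pd` are all assumptions made for the example.

```python
def simulate_pd(target, kp=20.0, kd=9.0, dt=0.001, steps=5000):
    """Drive a hypothetical unit-inertia joint from rest toward `target` (radians)."""
    angle, velocity = 0.0, 0.0
    for _ in range(steps):
        error = target - angle
        # PD law: the proportional term pulls the joint toward the target,
        # the derivative term damps the motion. The target is constant,
        # so the rate of change of the error is simply -velocity.
        torque = kp * error - kd * velocity
        # Semi-implicit Euler step for angle'' = torque (inertia assumed to be 1).
        velocity += torque * dt
        angle += velocity * dt
    return angle


final_angle = simulate_pd(target=1.0)
```

With these assumed gains the joint settles on the target without overshoot; lowering `kd` makes it oscillate before settling.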

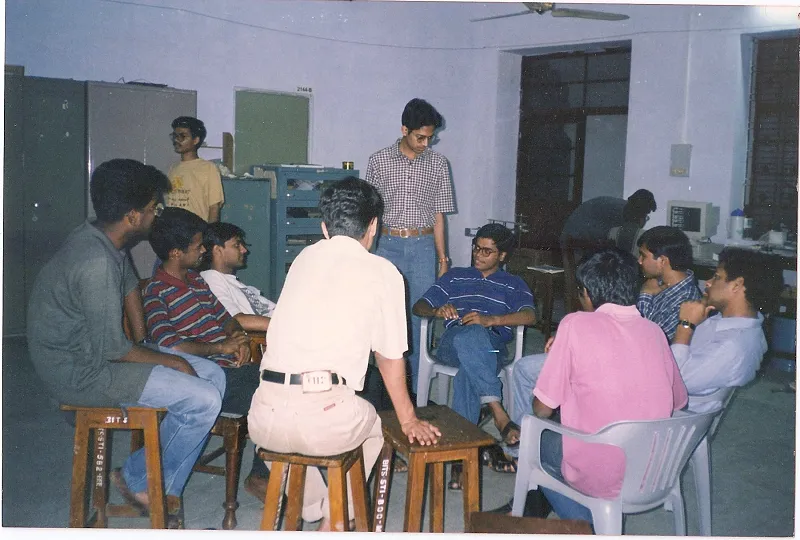

Kiran was surrounded by some great minds in the robotics lab. He shares the story of Sartaj Singh, a hardware guru, who once hijacked a fully functional robot (bought for the institute) in the lab, removed its motors, and then put in his own motors (and wrote his own controllers). He did all this just because the robot had a lousy operating system and a control box which was frustrating to program. Kiran recalls, “There were a bunch of guys like him who were not shy or afraid of anything. They convinced the professors to set up a collaboration with Motorola so we had all these microcontroller chips, evaluation models coming in.”

Kiran and others in the robotics lab represented a diverse combination of people with interests in control theory, building mechanisms, DSPs, and fabrication. Everyone fed off and challenged each other in a creative way. They spent a lot of time in the lab and almost started living there.

The robots and the mentors

Some of the robots Kiran worked on included the following:

- Scara: It was the very first version of the robot arm designed fully in-house. The students mathematically modelled every single detail and later presented a paper which described how to build a robot. In the pre-Google era, students spent hours in the library going through the IEEE magazines on robotics looking for solutions. Kiran says, “The experience of building Scara gave us an intuition about what not to design.”

- Hydra: It could do all-terrain locomotion. Kiran’s adviser, Prof IJ Nagrath, also pitched in when the team got stuck at a point. The mathematical model of how the wheel interacted with the ground was extremely non-linear, and the team had to figure out a way to implement the non-linear dynamics on a computer. Hydra had a double-gear mechanism in its wheels and there was no way to cut the gear in that set-up. The team finally built it in aluminum and welded it in a set-up in Coimbatore that allowed them to put things together without warping it.

- Quadruped: This was Kiran’s undergrad thesis. He built the first prototype with the idea that most of the work would be done by the mechanism itself, with automatic controls introduced in a few places.

- Hexabot: Kiran went to the Centre for Artificial Intelligence and Robotics (CAIR), Bengaluru, and built a hexabot. He had two papers from this project, which came in handy for his admission to higher studies.

Kiran attributes much of his learning at BITS Pilani to his advisers Prof IJ Nagrath and Prof RK Mittal who also started the Centre for Robotics in the college. Kiran recalls Prof Nagrath, author of one of the most seminal books in control theory, saying,

You want to do something? Go ahead and show it to me. Don't talk about it.

Looking back, Kiran believes that the five years (for the dual degree) at Pilani gave him enough time to sink his teeth into something substantial.

Eventually, he graduated with a CGPA of 9 and enrolled for a PhD at CMU in 1998. Choosing the college was a no-brainer given the fact that it was the best university in robotics then.

Also read – The untold story of Alan Cooper, the father of Visual Basic

Robotics at CMU

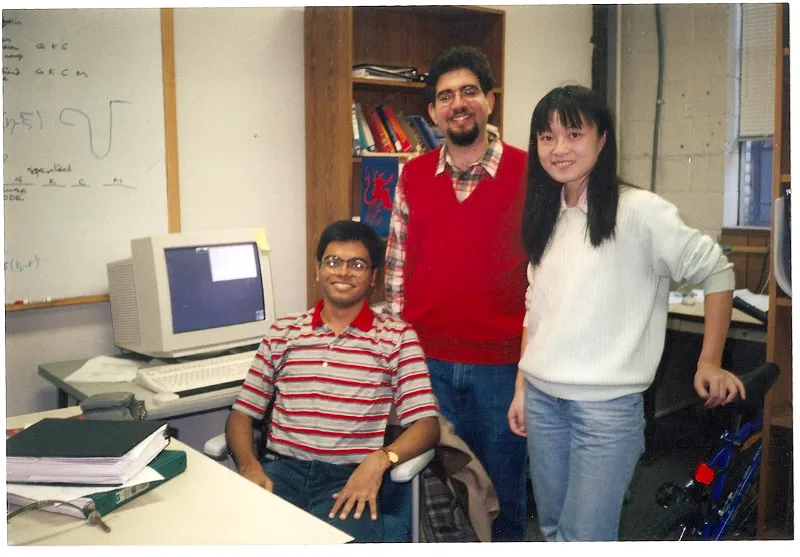

Kiran felt that CMU was like BITS Pilani except that it was much smaller. His class had 16 students from different academic (AI, perceptive computing, hardware) and geographical backgrounds (Chile, Mexico, Europe, China, India, Canada, and the US). Since the robotics students tend to attend most of the computer science (CS) classes and a few dedicated robotics classes (like control theory), Kiran ended up interacting with a lot of CS students.

A lot of the research under way then (1998-2004) formed the basis of today's deep learning and AI revolution. At that time, these were considered esoteric research topics.

In the first two years at CMU, Kiran was part of a robotics team working with two all-terrain vehicles (ATVs). The vehicles would roam around the grounds of CMU and communicate with each other. Kiran was working on the perception aspect of the ATVs, i.e., how to identify the sidewalks and roads. He says, “Pittsburgh has crazy weather and it snows a lot. Snow with slush becomes grey snow. The robot has to see the environment and make sense of the world.”

Kiran had three advisers at CMU. His mentor was Pradeep Khosla (now the Chancellor of UC San Diego). The other two advisers were Prof Steven Seitz (now on the faculty at the University of Washington) and Prof Jessica K. Hodgins.

Related read – Meet the co-creator of Julia programming language, Viral Shah

IL&M: the internship

At the end of his second year, Kiran came to IL&M for an internship. This dramatically changed his perspective on computer vision. Until then, his computer vision work had been more AI-based, but here it was focused on graphics. He got a chance to work on data from actual cameras.

He had to figure out how to solve for the camera motion in a real video sequence. He says, “For example, in a Transformers movie where Michael Bay is blowing shit up on the screen, how will you put the CGI robots into the (film) plate?”

Most of the parts you see in these movies are CGI layers. For all this to work, the most basic thing is to know how the camera was moving in the real world relative to the objects in the scene and what it was doing optically. Kiran says that one can't put too many sensors on the camera in real-world situations because directors hate having a specialised motion-capture set-up or an optical encoder on the camera.

Luckily mathematics is there to help. Kiran explains,

If you have a video sequence and you ask a question—what's the movement of the camera that resulted in this video you're seeing? That turns out to be a standard computer vision problem called structure from motion and camera motion reconstruction. There are many ways of solving it. The more complex the sequence is, the harder it is to automate it.

Kiran suggested solving this with a program (algorithm) that analyses the sequence. It still needs the artists, because computer algorithms are fragile when there are complex changes in the environment. An explosion can make all computer vision algorithms useless. Most of these algorithms make some assumption about visual continuity in the scene, and pixels change colour dramatically during an explosion. Similarly, motion blur, smoke, and all the things that are used to make something look interesting cinematically throw off computer algorithms. Fully automated algorithms won’t work in such cases.

When Kiran joined IL&M, they were working on episode 2 of Star Wars and trying to make Yoda's cape look real. They were struggling with the simulation of the cloth. There were many problems where the IL&M team wanted to mimic nature but didn't know how to do it.

Kiran suggested,

We just have to look at the videos of real cloth and measure perceptually how real cloth moves and get a program to analyse it and match it to the computer simulation (to make it look real). It's called an inverse problem where we (computer vision people) look at the video and figure out a model which could have caused that video.
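The idea of an inverse problem can be illustrated with a toy example. The sketch below is not the cloth solver used at IL&M; it assumes a simple damped spring as the "simulation", uses a trajectory generated with a known damping value as a stand-in for measurements taken from video, and recovers that value by brute-force search. All names and numbers (`simulate`, `fit_damping`, the stiffness, the search grid) are hypothetical.

```python
def simulate(damping, stiffness=4.0, dt=0.01, steps=300):
    """Forward model: positions of a damped spring released from x = 1."""
    x, v = 1.0, 0.0
    trajectory = []
    for _ in range(steps):
        acceleration = -stiffness * x - damping * v
        v += acceleration * dt
        x += v * dt
        trajectory.append(x)
    return trajectory


def mismatch(damping, observed):
    """Sum of squared differences between simulated and observed motion."""
    return sum((s - o) ** 2 for s, o in zip(simulate(damping), observed))


def fit_damping(observed):
    """Inverse problem: pick the damping whose simulation best matches the data."""
    candidates = [i / 100 for i in range(201)]  # assumed search range 0.00 to 2.00
    return min(candidates, key=lambda d: mismatch(d, observed))


# Stand-in for measurements extracted from video of the real object.
observed = simulate(damping=0.5)
estimate = fit_damping(observed)
```

A real system would replace the grid search with a proper optimiser and the spring with a far richer model, but the structure is the same: simulate, compare with the footage, adjust the parameters.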

You may also like – Sandipan Chattopadhyay — the statistician behind the 160x growth of Justdial

Back to CMU

When Kiran went back to CMU after his internship, he knew what he wanted to work on. His guide at IL&M was made the external committee member for his PhD.

From talking to friends at IL&M, Kiran knew the most difficult challenges that the VFX industry faced at that point in time. Everything lined up nicely in terms of what he wanted to do personally.

His research was on how to make numerical simulations match reality (specifically focused on dynamic objects like cloth, fluids, and rigid bodies). Numerical simulations are based on partial differential equations (PDEs), which are very sensitive to initial conditions. To make these numerical systems behave well, people add damping to the system. But the result doesn't feel real. He explains,

It's a hybrid problem because you don't have a mathematical model that's accurate enough and the reality is super complex.

His thesis turned out to be a group of algorithms that made CGI simulations look real.

He also met his wife Payal during those four years (2000-2004).

Experimenting at Epson

Though Kiran could have gone to IL&M at any point in time, he wanted to try something different and joined Epson. One of his flatmates, Anoop Bhattacharjya (who was in the computer graphics group at CMU), was recruiting eclectic computer vision researchers to work on a projector camera. He was auto-calibrating very large displays. It was still graphics and vision but not VFX. Kiran spent more than a year there but started realising that his heart wasn't in it. He then decided to join IL&M.

IL&M: The Hulk, Davy Jones, and more

Kiran had always found IL&M an inspiring place to be in. He says, “Having someone like Dennis Muren who has won six Academy Awards as one of the colleagues, is great.”

When he rejoined IL&M, he was happy to notice that four years after his work, they were still using the same technology. It was much more powerful and user-friendly, but the core (the mathematical optimisation algorithm) was the same. Kiran describes the state of the VFX industry and computer vision at that time,

The complexity that the VFX world was trying to create was to match nature with algorithms. Unlike robotics where everything has to be automatic (unless you're building a Mars rover), in VFX the emphasis was less on fully automatic algorithms and more on algorithms where you can attempt to solve much harder visual problems with a human in the loop to help the algorithm.

Kiran joined the computer vision team at IL&M and worked on vision, perception, and machine learning. Initially, he was doing more simulation, character rigging (as a programmer and not as an artist), and building tools that artists could use. He would pick problems that people couldn't solve and formulate them mathematically (and write a program for them). Around that time, IL&M was working on the character of Davy Jones in the movie Pirates of the Caribbean. John Knoll, the supervisor of the project, challenged the R&D group to simplify the work by automating some of it with mathematical programs.

Kiran explains, “In real life, the actor was wearing makeup of the character to give a reference to the animators so that they could match the body movements (of the actor) with the CGI characters.” They were doing it all by hand, at 24 frames per second. It's a laborious process and not scalable at all. That's how Kiran got involved, and after seven years of work he helped to put together Rogue One (Star Wars).

Kiran led the technology effort to solve some of the computer vision problems for Kim Libreri, the VFX supervisor of The Matrix, who had joined Lucasfilm by then. He had to eliminate all the different variables that could go wrong.

Also read – Meet Vijaya Kumar Ivaturi, the ex-CTO of Wipro, who’s ‘not business as usual’

Baby steps to ‘Hulk’ steps

In the beginning, Kiran and his team started with pilots like face replacement (automated using algorithms) for a few minutes in a movie. They then worked on games on Star Wars and Ninja Turtles among others. He recalls one of his biggest challenges,

Animators thought of characters as creative things and were not willing to believe that a computer could do that. We had to change this mindset. The technology is faithfully reproducing what the actors acted in an editable format.

Mark Ruffalo (who played the character of Hulk) was very happy with this technology. Duncan Jones was impressed by the work of IL&M with the Hulk and proposed to make his next movie specifically based on actors' acting (and use technology to automate the entire animation).

But the biggest of all came when Disney decided to recreate a digital version of Tarkin for Rogue One. Kiran recalls, “It was a risky and a bold decision because it could have destroyed the credibility among the entire fan base (if it went wrong).”

But it paid off, and the technology formed the front and centre of the movie. While working on the movie, the IL&M team realised that Guy Henry's mouth would never match Peter Cushing's lips (which were very thin). It was difficult to mimic, and animators had to do additional patch-up work.

Finally, in 2017, Kiran and his team won the Academy Award for making CGI faces that look and feel like real human faces. He says, “The trick is the same. You look at real people, analyse their faces with a program, and make the computer version do the same.”

Kiran feels that in the last decade, there has been a tremendous explosion of ideas fueled by VFX. Characters like Davy Jones (Pirates of the Caribbean) and Gollum (Lord of the Rings), and scenes with explosions, crashes, waves and everything one can imagine doing with sci-fi, have been put on the big screen. The advancement in computer graphics hardware has pushed the movie industry further.

Turning entrepreneur with Loom.ai

During his time at IL&M, Kiran was in regular contact with his BITS Pilani batchmate Mahesh Ramasubramanian, who was working with DreamWorks Animation (via Cornell University). Mahesh had worked on the VFX of Madagascar, Monsters vs. Aliens and many other movies by DreamWorks Animation. Kiran and Mahesh came from different worlds (though the same industry). Kiran explains,

DreamWorks creates an entire world and then puts characters in it, and Lucasfilm (IL&M) is the exact opposite. They take CGI characters and put them in the real world.

Kiran and Mahesh helped to introduce incredible characters like Hulk and Shrek to the world. He realised that the magic of the big screen was missing in the outside world. The duo then came up with the idea of building characters (avatars based on faces of people) on a smartphone without using the machinery, hardware, and rendering.

They had to solve two problems:

- You can't put a human in the loop. The tech (process) has to be fully automatic.

- You can't have extra hardware. You've to work with whatever every smartphone has—a camera. The algorithms have to work on data from the camera (an image or the video).

A lot of the computing happens in the cloud, which allows them to do very sophisticated things.

Both quit their jobs and started Loom.ai in March 2016. Loom.ai has raised $1.35 million in funding and is currently a team of eight people. Kiran says,

We have taken it on ourselves to basically build the best 3D representation for every face on the planet. Our dream is to digitise every face. Anyone who has a smartphone should be able to create their digital representation. This could be your new digital identity.

Loom.ai is using learning algorithms to automate the bits of technology that couldn't be automated in the past. The technology at Loom.ai is a marriage of artificial intelligence and visual effects. According to Kiran, early adopters of the Loom.ai technology will be messaging apps, emoji, AR apps, and games, basically any place where you can see yourself in a 3D experience. Mid-term use cases could be something like theme parks where one can experience a ride, whereas the long-term applications are telepresence and e-commerce (people can talk and shop as avatars).

The biggest challenge at Loom.ai is scale and automation.

Kiran indicates that Loom.ai avatars will soon be seen in a few apps. The startup is working with some companies that are set to deploy this technology. Summing up his startup experience, Kiran says,

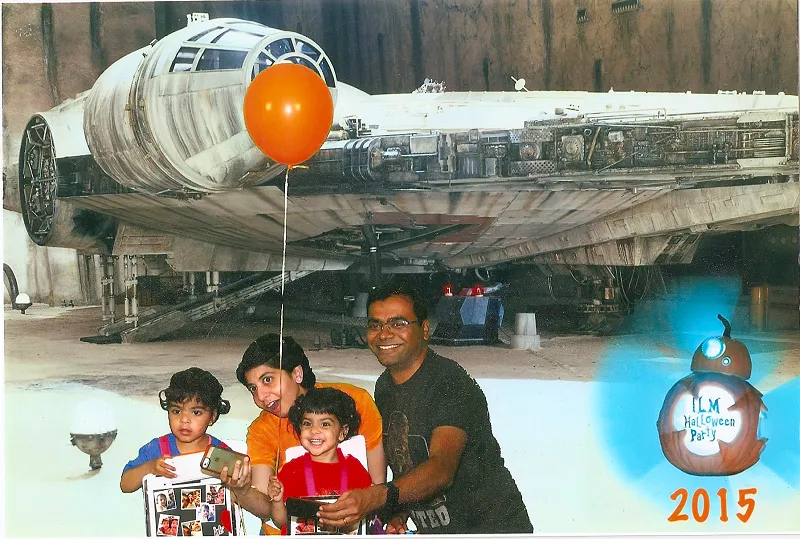

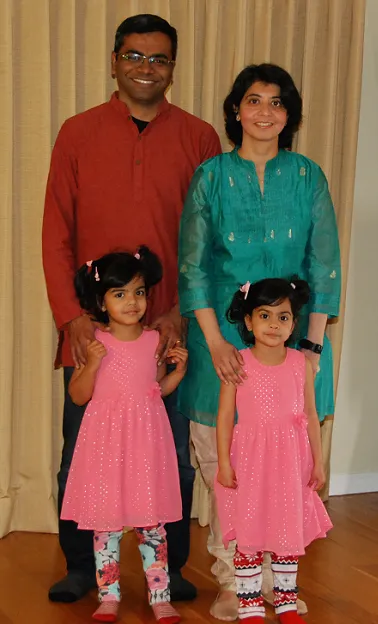

Starting a company is like having a child. I've twins so I know. It's surprisingly satisfying to start something of your own. It's challenging as hell most of the time but it's unlike anything I would have done at Lucasfilm (IL&M). It tests you in a way that you've never been tested before. There's no parachute. You're in a free fall.

Kiran feels that the team at Loom.ai is very similar to the composition of teams he would have had at IL&M. He says, "If it works out, then more people would have used Loom.ai than any of the projects we've worked on ever."

Related read - Meet Mitesh Agarwal—the ‘brain’ of BITS who’s heading technology at Oracle India

The overarching theme

Going to BITS Pilani, doing robotics; going to Carnegie Mellon University and doing computer graphics; going to ILM to work on computer vision; and now Kiran is taking it to the next level at Loom.ai. The overarching theme that keeps him excited after spending so much time in technology is figuring out a way to mimic movement in nature and representing that in a computer.

As you're looking at different things you want to do and figuring out how to tackle something, it's helpful to look into something that draws you more than others. This has guided me through a lot of things in my life,

says Kiran.

Kiran has a lot of conviction in whatever he does. He adds, “I knew at the bottom of my heart that with the right computer algorithm, you can do much better than just trying to do this by hand (animation). Some of the legends and biggest brains of the VFX industry had a similar opinion and believed in it. This also helped me a lot.”

For now, he’s just looking to find a balance between the startup and his family life. It would be extremely satisfying for him if he could solve some of the problems (in computer vision) he’s working on in the next few years.

You can connect with Kiran on LinkedIn.

Trivia:

- The headquarters of Star Trek’s alliance shown in the movie were located inside the IL&M campus.

- Pixar was IL&M's R&D group before it was sold to Steve Jobs.